In his pursuit of wisdom, Peter Bevelin was inspired by Charlie Munger’s idea:

I believe in the discipline of mastering the best of what other people have ever figured out.

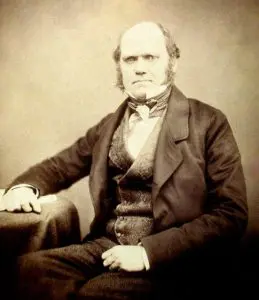

Bevelin was also influenced by Munger’s statement that Charles Darwin was one of the best thinkers who ever lived, despite the fact that many others had much higher IQs. Bevelin:

Darwin’s lesson is that even people who aren’t geniuses can outthink the rest of mankind if they develop certain thinking habits.

(Photo by Maull and Polyblank (1855), via Wikimedia Commons)

In the spirit of Darwin and Munger, and with the goal of gaining a better understanding of human behavior, Bevelin read books in biology, psychology, neuroscience, physics, and mathematics. Bevelin took extensive notes. The result is the book, Seeking Wisdom: From Darwin to Munger.

Here’s the outline:

PART ONE: WHAT INFLUENCES OUR THINKING

- Our anatomy sets the limits for our behavior

- Evolution selected the connections that produce useful behavior for survival and reproduction

- Adaptive behavior for survival and reproduction

PART TWO: THE PSYCHOLOGY OF MISJUDGMENTS

- Misjudgments explained by psychology

- Psychological reasons for mistakes

PART THREE: THE PHYSICS AND MATHEMATICS OF MISJUDGMENTS

- Systems thinking

- Scale and limits

- Causes

- Numbers and their meaning

- Probabilities and number of possible outcomes

- Scenarios

- Coincidences and miracles

- Reliability of case evidence

- Misrepresentative evidence

PART FOUR: GUIDELINES TO BETTER THINKING

- Models of reality

- Meaning

- Simplification

- Rules and filters

- Goals

- Alternatives

- Consequences

- Quantification

- Evidence

- Backward thinking

- Risk

- Attitudes

(Photo by Nick Webb)

Part One: What Influences Our Thinking

OUR ANATOMY SETS THE LIMITS FOR OUR BEHAVIOR

Bevelin quotes Nobel Laureate Dr. Gerald Edelman:

The brain is the most complicated material object in the known universe. If you attempted to count the number of connections, one per second, in the mantle of the brain (the cerebral cortex), you would finish counting 32 million years later. But that is not the whole story. The way the brain is connected–its neuroanatomical pattern–is enormously intricate. Within this anatomy a remarkable set of dynamic events take place in hundredths of a second and the number of levels controlling these events, from molecules to behavior, is quite large.

Neurons can send signals–electrochemical pulses–to specific target cells over long distances. These signals are sent by axons, thin fibers that extend from neurons to other parts of the brain. Axons can be quite long.

(Illustration by ustas)

Some neurons emit electrochemical pulses constantly while other neurons are quiet most of the time. A single axon can have several thousand synaptic connections. When an electrochemical pulse travels along an axon and reaches a synapse, it causes a neurotransmitter (a chemical) to be released.

The human brain contains approximately 100 trillion synapses. From Wikipedia:

The functions of these synapses are very diverse: some are excitatory (exciting the target cell); others are inhibitory; others work by activating second messenger systems that change the internal chemistry of their target cells in complex ways. A large number of synapses are dynamically modifiable; that is, they are capable of changing strength in a way that is controlled by the patterns of signals that pass through them. It is widely believed that activity-dependent modification of synapses is the brain’s primary mechanism for learning and memory.

Most of the space in the brain is taken up by axons, which are often bundled together in what are called nerve fiber tracts. A myelinated axon is wrapped in a fatty insulating sheath of myelin, which serves to greatly increase the speed of signal propagation. (There are also unmyelinated axons.) Myelin is white, making parts of the brain filled exclusively with nerve fibers appear as light-colored white matter, in contrast to the darker-colored grey matter that marks areas with high densities of neuron cell bodies.

Genes, life experiences, and randomness determine how neurons connect.

Also, everything that happens in the brain involves many areas at once (the left brain versus right brain distinction is not strictly accurate). This is part of why the brain is so flexible. There are different ways for the brain to achieve the same result.

EVOLUTION SELECTED THE CONNECTIONS THAT PRODUCE USEFUL BEHAVIOR FOR SURVIVAL AND REPRODUCTION

Bevelin writes:

If certain connections help us interact with our environment, we use them more often than connections that don’t help us. Since we use them more often, they become strengthened.

Evolution has given us preferences that help us classify what is good or bad. When these values are satisfied (causing either pleasure or less pain) through the interaction with our environment, these neural connections are strengthened. These values are reinforced over time because they give humans advantages for survival and reproduction in dealing with their environment.

(Illustration by goce risteski)

If a certain behavior is rewarding, the neural connections associated with that behavior get strengthened. The next time the same situation is encountered, we feel motivated to respond in the way that we’ve learned brings pleasure (or reduces pain). Bevelin:

We do things that we associate with pleasure and avoid things that we associate with pain.

ADAPTIVE BEHAVIOR FOR SURVIVAL AND REPRODUCTION

Bevelin:

The consequences of our actions reinforce certain behavior. If the consequences were rewarding, our behavior is likely to be repeated. What we consider rewarding is individual specific. Rewards can be anything from health, money, job, reputation, family, status, or power. In all of these activities, we do what works. This is how we adapt. The environment selects our future behavior.

Especially in a random environment like the stock market, it can be difficult to figure out what works and what doesn’t. We may make a good decision based on the odds, but get a poor outcome. Or we may make a bad decision based on the odds, but get a good outcome. Only over the course of many decisions can we tell if our investment process is probably working.
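
To make that last point concrete, here is a minimal sketch (not from Bevelin’s book). It assumes a hypothetical process that makes independent, even-money bets and wins each one with probability 0.55; the function name `prob_net_loss` and those numbers are illustrative assumptions only. It computes the exact binomial probability that a genuinely good process still shows more losses than wins after n decisions.

```python
from math import comb


def prob_net_loss(p_win: float, n: int) -> float:
    """Probability that n independent even-money bets, each won with
    probability p_win, end with fewer wins than losses."""
    return sum(
        comb(n, k) * p_win**k * (1 - p_win) ** (n - k)
        for k in range(n + 1)
        if k < n - k  # fewer wins (k) than losses (n - k)
    )


# Assumed edge: a 55% chance of winning each decision.
for n in (1, 11, 101, 501):
    print(n, round(prob_net_loss(0.55, n), 4))
```

After a single decision, the good process looks like a loser 45% of the time. The printed probability shrinks as n grows, which is why only a long record of decisions says much about the process itself.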

Part Two: The Psychology of Misjudgments

Bevelin quotes the Greek philosopher and orator, Dio Chrysostom:

“Why oh why are human beings so hard to teach, but so easy to deceive.”

MISJUDGMENTS EXPLAINED BY PSYCHOLOGY

Bevelin lists 28 reasons for misjudgments and mistakes:

- Bias from mere association–automatically connecting a stimulus with pain or pleasure; including liking or disliking something associated with something bad or good. Includes seeing situations as identical because they seem similar. Also bias from Persian Messenger Syndrome–not wanting to be the carrier of bad news.

- Underestimating the power of incentives (rewards and punishment)–people repeat actions that result in rewards and avoid actions that they are punished for.

- Underestimating bias from own self-interest and incentives.

- Self-serving bias–overly positive view of our abilities and future. Includes over-optimism.

- Self-deception and denial–distortion of reality to reduce pain or increase pleasure. Includes wishful thinking.

- Bias from consistency tendency–being consistent with our prior commitments and ideas even when acting against our best interest or in the face of disconfirming evidence. Includes Confirmation Bias–looking for evidence that confirms our actions and beliefs and ignoring or distorting disconfirming evidence.

- Bias from deprival syndrome–strongly reacting (including desiring and valuing more) when something we like and have (or almost have) is (or threatens to be) taken away or “lost.” Includes desiring and valuing more what we can’t have or what is (or threatens to be) less available.

- Status quo bias and do-nothing syndrome–keeping things the way they are. Includes minimizing effort and a preference for default options.

- Impatience–valuing the present more highly than the future.

- Bias from envy and jealousy.

- Distortion by contrast comparison–judging and perceiving the absolute magnitude of something not by itself but based only on its difference to something else presented closely in time or space or to some earlier adaptation level. Also underestimating the consequences over time of gradual changes.

- The anchoring effect–People tend to use any random number as a baseline for estimating an unknown quantity, despite the fact that the unknown quantity is totally unrelated to the random number. (People also overweigh initial information that is non-quantitative.)

- Over-influence by vivid or the most recent information.

- Omission and abstract blindness–only seeing stimuli we encounter or that grabs our attention, and neglecting important missing information or the abstract. Includes inattentional blindness.

- Bias from reciprocation tendency–repaying in kind what others have done for or to us like favors, concessions, information, and attitudes.

- Bias from over-influence by liking tendency–believing, trusting, and agreeing with people we know and like. Includes bias from over-desire for liking and social acceptance and for avoiding social disapproval. Also bias from disliking–our tendency to avoid and disagree with people we don’t like.

- Bias from over-influence by social proof–imitating the behavior of many others or similar others. Includes crowd folly.

- Bias from over-influence by authority–trusting and obeying a perceived authority or expert.

- The Narrative Fallacy (Bevelin uses the term “Sensemaking”)–constructing explanations that fit an outcome. Includes being too quick in drawing conclusions. Also Hindsight Bias: Thinking events that have happened were more predictable than they were.

- Reason-respecting–complying with requests merely because we’ve been given a reason. Includes underestimating the power in giving people reasons.

- Believing first and doubting later–believing what is not true, especially when distracted.

- Memory limitations–remembering selectively and wrong. Includes influence by suggestions.

- Do-something syndrome–acting without a sensible reason.

- Mental confusion from say-something syndrome–feeling a need to say something when we have nothing to say.

- Emotional arousal–making hasty judgments under the influence of intense emotions. Includes exaggerating the emotional impact of future events.

- Mental confusion from stress.

- Mental confusion from physical or psychological pain, and the influence of chemicals or diseases.

- Bias from over-influence by the combined effect of many psychological tendencies operating together.

PSYCHOLOGICAL REASONS FOR MISTAKES

Bevelin notes that his explanations for the 28 reasons for misjudgments are based on work by Charles Munger, Robert Cialdini, Richard Thaler, Robyn Dawes, Daniel Gilbert, Daniel Kahneman, and Amos Tversky. All are psychologists except for Thaler (economist) and Munger (investor).

1. Mere Association

Bevelin:

Association can influence the immune system. One experiment studied food aversion in mice. Mice got saccharin-flavored water (saccharin has incentive value due to its sweet taste) along with a nausea-producing drug. Would the mice show signs of nausea the next time they got saccharin water alone? Yes, but the mice also developed infections. It was known that the drug in addition to producing nausea, weakened the immune system, but why would saccharin alone have this effect? The mere pairing of the saccharin with the drug caused the mouse immune system to learn the association. Therefore, every time the mouse encountered the saccharin, its immune system weakened making the mouse more vulnerable to infections.

If someone brings us bad news, we tend to associate that person with the bad news–and dislike them–even if the person didn’t cause the bad news.

2. Incentives (Reward and Punishment)

Incentives are extremely important. Charlie Munger:

I think I’ve been in the top 5% of my age cohort all my life in understanding the power of incentives, and all my life I’ve underestimated it. Never a year passes that I don’t get some surprise that pushes my limit a little farther.

Munger again:

From all business, my favorite case on incentives is Federal Express. The heart and soul of their system–which creates the integrity of the product–is having all their airplanes come to one place in the middle of the night and shift all the packages from plane to plane. If there are delays, the whole operation can’t deliver a product full of integrity to Federal Express customers. And it was always screwed up. They could never get it done on time. They tried everything–moral suasion, threats, you name it. And nothing worked. Finally, somebody got the idea to pay all these people not so much an hour, but so much a shift–and when it’s all done, they can all go home. Well, their problems cleared up overnight.

People can learn the wrong incentives in a random environment like the stock market. A good decision based on the odds may yield a bad result, while a bad decision based on the odds may yield a good result. People tend to become overly optimistic after a success (even if it was good luck) and overly pessimistic after a failure (even if it was bad luck).

3. Self-interest and Incentives

“Never ask the barber if you need a haircut.”

Munger has commented that commissioned sales people, consultants, and lawyers have a tendency to serve the transaction rather than the truth. Many others–including bankers and doctors–are in the same category. Bevelin quotes the American actor Walter Matthau:

“My doctor gave me six months to live. When I told him I couldn’t pay the bill, he gave me six more months.”

If they make unprofitable loans, bankers may be rewarded for many years while the consequences of the bad loans may not occur for a long time.

When designing a system, careful attention must be paid to incentives. Bevelin notes that a new program was put in place in New Orleans: districts that showed improvement in crime statistics would receive rewards, while districts that didn’t faced cutbacks and firings. As a result, in one district, nearly half of all serious crimes were re-classified as minor offences and never fully investigated.

4. Self-serving Tendencies and Overoptimism

We tend to overestimate our abilities and future prospects when we are knowledgeable on a subject, feel in control, or after we’ve been successful.

Bevelin again:

When we fail, we blame external circumstances or bad luck. When others are successful, we tend to credit their success to luck and blame their failures on foolishness. When our investments turn into losers, we had bad luck. When they turn into winners, we are geniuses. This way we draw the wrong conclusions and don’t learn from our mistakes. We also underestimate luck and randomness in outcomes.

5. Self-deception and Denial

Munger likes to quote Demosthenes:

Nothing is easier than self-deceit. For what each man wishes, that he also believes to be true.

People have a strong tendency to believe what they want to believe. People prefer comforting illusions to painful truths.

Richard Feynman:

The first principle is that you must not fool yourself–and you are the easiest person to fool.

6. Consistency

Bevelin:

Once we’ve made a commitment–a promise, a choice, taken a stand, invested time, money, or effort–we want to remain consistent. We want to feel that we’ve made the right decision. And the more we have invested in our behavior the harder it is to change.

The more time, money, effort, and pain we invest in something, the more difficulty we have recognizing a mistaken commitment. We don’t want to face the prospect of a big mistake.

For instance, as the Vietnam War became more and more a colossal mistake, key leaders found it more and more difficult to recognize the mistake and walk away. The U.S. could have walked away years earlier than it did, which would have saved a great deal of money and thousands of lives.

Bevelin quotes Warren Buffett:

What the human being is best at doing is interpreting all new information so that their prior conclusions remain intact.

Even scientists, whose job is to be as objective as possible, have a hard time changing their minds after they’ve accepted the existing theory for a long time. Physicist Max Planck:

A new scientific truth does not triumph by convincing its opponents and making them see the light, but rather because its opponents eventually die and a new generation grows up that is familiar with it.

7. Deprival Syndrome

Bevelin:

When something we like is (or threatens to be) taken away, we often value it higher. Take away people’s freedom, status, reputation, money, or anything they value, and they get upset… The more we like what is taken away or the larger the commitment we’ve made, the more upset we become. This can create hatreds, revolts, violence, and retaliations.

Fearing deprival, people will be overly conservative or will engage in cover-ups.

A good value investor is wrong roughly 40 percent of the time. However, due to deprival syndrome and loss aversion–the pain of a loss is about 2 to 2.5 times greater than the pleasure of an equivalent gain–investors have a hard time admitting their mistakes and moving on. Admitting a mistake means accepting a loss of money and also recognizing our own fallibility.

Furthermore, deprival syndrome makes us keep trying something if we’ve just experienced a series of near misses. We feel that “we were so close” to getting some reward that we can’t give up now, even if the reward may not be worth the expected cost.

Finally, the harder it is to get something, the more value we tend to place on it.
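
The loss-aversion figure quoted above (a loss hurting about 2 to 2.5 times as much as an equal gain pleases) can be turned into simple arithmetic. The sketch below is an illustration only: it assumes a plain linear weighting of losses, which is a simplification rather than a full model of how people value outcomes, and the function names and the $150/$100 coin flip are hypothetical.

```python
def felt_value(amount: float, loss_aversion: float) -> float:
    """Subjective value of a gain or loss when losses are weighted more heavily
    (assumed linear weighting, for illustration)."""
    return amount if amount >= 0 else loss_aversion * amount


def felt_value_of_coin_flip(gain: float, loss: float, loss_aversion: float) -> float:
    """Felt value of a 50/50 bet that wins `gain` or loses `loss`."""
    return 0.5 * felt_value(gain, loss_aversion) + 0.5 * felt_value(-loss, loss_aversion)


# A coin flip that wins $150 or loses $100 has an expected monetary value of +$25.
for loss_aversion in (2.0, 2.5):
    print(loss_aversion, felt_value_of_coin_flip(150, 100, loss_aversion))
# prints:
# 2.0 -25.0
# 2.5 -50.0
```

Under these assumptions, a bet with a positive expected payoff still feels like a losing proposition. The gain has to reach 2 to 2.5 times the possible loss before the bet feels neutral, which fits the observation that investors struggle to accept a loss and move on.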

8. Status Quo and Do-Nothing Syndrome

We feel worse about a harm or loss if it results from our action than if it results from our inaction. We prefer the default option–what is selected automatically unless we change it. However, as Bevelin points out, doing nothing is still a decision and the cost of doing nothing could be greater than the cost of taking an action.

In countries where being an organ donor is the default choice, people strongly prefer to be organ donors. But in countries where not being an organ donor is the default choice, people prefer not to be organ donors. In each case, most people simply go with the default option–the status quo. But society is better off if most people are organ donors.

9. Impatience

We value the present more than the future. We often seek pleasure today at the cost of a potentially better future. It’s important to understand that pain and sacrifice today–if done for the right reasons–can lead to greater happiness in the future.

10. Envy and Jealousy

Charlie Munger and Warren Buffett often point out that envy is a stupid sin because–unlike other sins like gluttony–there’s no upside. Also, jealousy is among the top three motives for murder.

It’s best to set goals and work towards them without comparing ourselves to others. Partly by chance, there are always some people doing better and some people doing worse.

11. Contrast Comparison

The classic demonstration of contrast comparison is to stick one hand in cold water and the other hand in warm water. Then put both hands in a bucket of room-temperature water. Your cold hand will feel warm while your warm hand will feel cold.

Bevelin writes:

We judge stimuli by differences and changes and not absolute magnitudes. For example, we evaluate stimuli like temperature, loudness, brightness, health, status, or prices based on their contrast or difference from a reference point (the prior or concurrent stimuli or what we have become used to). This reference point changes with new experiences and context.

How we value things depends on what we compare them with.

Salespeople, after selling the main item, often try to sell add-ons, which seem cheap by comparison. If you buy a car for $50,000, then adding an extra $1,000 for leather doesn’t seem like much. If you buy a computer for $1,500, then adding an extra $50 seems inconsequential.

Bevelin observes:

The same thing may appear attractive when compared to less attractive things and unattractive when compared to more attractive things. For example, studies show that a person of average attractiveness is seen as less attractive when compared to more attractive others.

One trick some real estate agents use is to show the client a terrible house at an absurdly high price first, and then show them a merely mediocre house at a somewhat high price. The agent often makes the sale.

Munger has remarked that some people enter into a bad marriage because their previous marriage was terrible. These folks make the mistake of thinking that what is better based on their own limited experience is the same as what is better based on the experience of many different people.

Another issue is that something can gradually get much worse over time, but we don’t notice it because each increment is small. It’s like the frog in water where the water is slowly brought to the boiling point. For instance, the behavior of some people may get worse and worse and worse. But we fail to notice because the change is too gradual.

12. Anchoring

The anchoring effect: People tend to use any random number as a baseline for estimating an unknown quantity, despite the fact that the unknown quantity is totally unrelated to the random number. (People also overweigh initial information that is non-quantitative.)

Daniel Kahneman and Amos Tversky did one experiment where they spun a wheel of fortune, but they had secretly programmed the wheel so that it would stop on 10 or 65. After the wheel stopped, participants were asked to estimate the percentage of African countries in the UN. Participants who saw “10” on the wheel guessed 25% on average, while participants who saw “65” on the wheel guessed 45% on average, a huge difference.

Behavioral finance expert James Montier has run his own experiment on anchoring. People are asked to write down the last four digits of their phone number. Then they are asked whether the number of doctors in their capital city is higher or lower than the last four digits of their phone number. Results: Those whose last four digits were greater than 7,000 on average report 6,762 doctors, while those with telephone numbers below 2,000 arrived at an average 2,270 doctors. (James Montier, Behavioural Investing, Wiley 2007, page 120)

Those are just two experiments out of many. The anchoring effect is “one of the most reliable and robust results of experimental psychology,” says Kahneman. Furthermore, Montier observes that the anchoring effect is one reason why people cling to financial forecasts, despite the fact that most financial forecasts are either wrong, useless, or impossible to time.

When faced with the unknown, people will grasp onto almost anything. So it is little wonder that an investor will cling to forecasts, despite their uselessness.

13. Vividness and Recency

Bevelin explains:

The more dramatic, salient, personal, entertaining, or emotional some information, event, or experience is, the more influenced we are. For example, the easier it is to imagine an event, the more likely we are to think that it will happen.

We are easily influenced when we are told stories because we relate to stories better than to logic or fact. We love to be entertained. Information we receive directly, through our eyes or ears has more impact than information that may have more evidential value. A vivid description from a friend or family member is more believable than true evidence. Statistical data is often overlooked. Studies show that jurors are influenced by vivid descriptions. Lawyers try to present dramatic and memorable testimony.

The media capitalizes on negative events–especially if they are vivid–because negative news sells. For instance, even though the odds of being in a plane crash are infinitesimally low–one in 11 million–people become very fearful when a plane crash is reported in the news. Many people continue to think that a car is safer than a plane, but you are over 2,000 times more likely to be in a car crash than a plane crash. (The odds of being in a car crash are one in 5,000.)
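
As a quick check on the comparison above, the two odds quoted in the text can be divided directly. This is only a sketch of the arithmetic; the variable names are illustrative, and the odds are taken from the paragraph above as given.

```python
from fractions import Fraction

# Odds as quoted in the text above.
plane_crash_odds = Fraction(1, 11_000_000)
car_crash_odds = Fraction(1, 5_000)

# How many times more likely a car crash is than a plane crash.
ratio = car_crash_odds / plane_crash_odds
print(ratio)  # 2200
```

The result, 2,200, matches the “over 2,000 times” figure.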

14. Omission and Abstract Blindness

We see the available information. We don’t see what isn’t reported. Missing information doesn’t draw our attention. We tend not to think about other possibilities, alternatives, explanations, outcomes, or attributes. When we try to find out if one thing causes another, we only see what happened, not what didn’t happen. We see when a procedure works, not when it doesn’t work. When we use checklists to find out possible reasons for why something doesn’t work, we often don’t see that what is not on the list in the first place may be the reason for the problem.

Often we don’t see things right in front of us if our attention is focused elsewhere.

15. Reciprocation

Munger:

The automatic tendency of humans to reciprocate both favors and disfavors has long been noticed as it is in apes, monkeys, dogs, and many less cognitively gifted animals. The tendency facilitates group cooperation for the benefit of members.

Unfortunately, hostility can get extreme. But we have the ability to train ourselves. Munger:

The standard antidote to one’s overactive hostility is to train oneself to defer reaction. As my smart friend Tom Murphy so frequently says, ‘You can always tell the man off tomorrow, if it is such a good idea.’

Munger then notes that the tendency to reciprocate favor for favor is also very intense. On the whole, Munger argues, the reciprocation tendency is a positive:

Overall, both inside and outside religions, it seems clear to me that Reciprocation Tendency’s constructive contributions to man far outweigh its destructive effects…

And the very best part of human life probably lies in relationships of affection wherein parties are more interested in pleasing than being pleased–a not uncommon outcome in display of reciprocate-favor tendency.

Guilt is also a net positive, asserts Munger:

…To the extent the feeling of guilt has an evolutionary base, I believe the most plausible cause is the mental conflict triggered in one direction by reciprocate-favor tendency and in the opposite direction by reward superresponse tendency pushing one to enjoy one hundred percent of some good thing… And if you, like me… believe that, averaged out, feelings of guilt do more good than harm, you may join in my special gratitude for reciprocate-favor tendency, no matter how unpleasant you find feelings of guilt.

16. Liking and Disliking

Munger:

One very practical consequence of Liking/Loving Tendency is that it acts as a conditioning device that makes the liker or lover tend (1) to ignore faults of, and comply with wishes of, the object of his affection, (2) to favor people, products, and actions merely associated with the object of his affection [this is also due to Bias from Mere Association] and (3) to distort other facts to facilitate love.

We’re naturally biased, so we have to be careful in some situations.

On the other hand, Munger points out that loving admirable persons and ideas can be very beneficial.

…a man who is so constructed that he loves admirable persons and ideas with a special intensity has a huge advantage in life. This blessing came to both Buffett and myself in large measure, sometimes from the same persons and ideas. One common, beneficial example for us both was Warren’s uncle, Fred Buffett, who cheerfully did the endless grocery-store work that Warren and I ended up admiring from a safe distance. Even now, after I have known so many other people, I doubt if it is possible to be a nicer man than Fred Buffett was, and he changed me for the better.

Warren Buffett:

If you tell me who your heroes are, I’ll tell you how you’re gonna turn out. It’s really important in life to have the right heroes. I’ve been very lucky in that I’ve probably had a dozen or so major heroes. And none of them have ever let me down. You want to hang around with people that are better than you are. You will move in the direction of the crowd that you associate with.

Disliking: Munger notes that Switzerland and the United States have clever political arrangements to “channel” the hatreds and dislikings of individuals and groups into nonlethal patterns including elections.

But the dislikings and hatreds never go away completely… And we also get the extreme popularity of very negative political advertising in the United States.

Munger explains:

Disliking/Hating Tendency also acts as a conditioning device that makes the disliker/hater tend to (1) ignore virtues in the object of dislike, (2) dislike people, products, and actions merely associated with the object of dislike, and (3) distort other facts to facilitate hatred.

Distortion of that kind is often so extreme that miscognition is shockingly large. When the World Trade Center was destroyed, many Pakistanis immediately concluded that the Hindus did it, while many Muslims concluded that the Jews did it. Such factual distortions often make mediation between opponents locked in hatred either difficult or impossible. Mediations between Israelis and Palestinians are difficult because facts in one side’s history overlap very little with facts from the other side’s.

17. Social Proof

Munger comments:

The otherwise complex behavior of man is much simplified when he automatically thinks and does what he observes to be thought and done around him. And such followership often works fine…

Psychology professors love Social-Proof Tendency because in their experiments it causes ridiculous results. For instance, if a professor arranges for some stranger to enter an elevator wherein ten ‘compliance practitioners’ are all standing so that they face the rear of the elevator, the stranger will often turn around and do the same.

Of course, like the other tendencies, Social Proof has an evolutionary basis. If the crowd was running in one direction, typically your best response was to follow.

But, in today’s world, simply copying others often doesn’t make sense. Munger:

And in the highest reaches of business, it is not at all uncommon to find leaders who display followership akin to that of teenagers. If one oil company foolishly buys a mine, other oil companies often quickly join in buying mines. So also if the purchased company makes fertilizer. Both of these oil company buying fads actually bloomed, with bad results.

Of course, it is difficult to identify and correctly weigh all the possible ways to deploy the cash flow of an oil company. So oil company executives, like everyone else, have made many bad decisions that were triggered by discomfort from doubt. Going along with social proof provided by the action of other oil companies ends this discomfort in a natural way.

Munger points out that Social Proof can sometimes be constructive:

Because both bad and good behavior are made contagious by Social-Proof Tendency, it is highly important that human societies (1) stop any bad behavior before it spreads and (2) foster and display all good behavior.

It’s vital for investors to be able to think independently. As Ben Graham says:

You are neither right nor wrong because the crowd disagrees with you. You are right because your data and reasoning are right.

18. Authority

A disturbingly significant portion of copilots will not correct obvious errors made by the pilot during simulation exercises. There are also real world examples of copilots crashing planes because they followed the pilot mindlessly. Munger states:

…Such cases are also given attention in the simulator training of copilots who have to learn to ignore certain really foolish orders from boss pilots because boss pilots will sometimes err disastrously. Even after going through such a training regime, however, copilots in simulator exercises will too often allow the simulated plane to crash because of some extreme and perfectly obvious simulated error of the chief pilot.

Psychologist Stanley Milgram wanted to understand why so many seemingly normal and decent people engaged in horrific, unspeakable acts during World War II. Munger:

[Milgram] decided to do an experiment to determine exactly how far authority figures could lead ordinary people into gross misbehavior. In this experiment, a man posing as an authority figure, namely a professor governing a respectable experiment, was able to trick a great many ordinary people into giving what they had every reason to believe were massive electric shocks that inflicted heavy torture on innocent fellow citizens…

Almost any intelligent person with my checklist of psychological tendencies in his hand would, by simply going down the checklist, have seen that Milgram’s experiment involved about six powerful psychological tendencies acting in confluence to bring about his extreme experimental result. For instance, the person pushing Milgram’s shock lever was given much social proof from presence of inactive bystanders whose silence communicated that his behavior was okay…

Bevelin quotes the British novelist and scientist Charles Percy Snow:

When you think of the long and gloomy history of man, you will find more hideous crimes have been committed in the name of obedience than have ever been committed in the name of rebellion.

19. The Narrative Fallacy (Sensemaking)

(Bevelin uses the term “sensemaking,” but “narrative fallacy” is better, in my view.) In The Black Swan, Nassim Taleb writes the following about the narrative fallacy:

The narrative fallacy addresses our limited ability to look at sequences of facts without weaving an explanation into them, or, equivalently, forcing a logical link, an arrow of relationship, upon them. Explanations bind facts together. They make them all the more easily remembered; they help them make more sense. Where this propensity can go wrong is when it increases our impression of understanding.

The narrative fallacy is central to many of the biases and misjudgments mentioned by Charlie Munger. (In his great book, Thinking, Fast and Slow, Daniel Kahneman discusses the narrative fallacy as a central cognitive bias.) The human brain, whether using System 1 (intuition) or System 2 (logic), always looks for or creates logical coherence among random data. Often System 1 is right when it assumes causality; thus, System 1 is generally helpful, thanks to evolution. Furthermore, System 2, by searching for underlying causes or coherence, has, through careful application of the scientific method over centuries, developed a highly useful set of scientific laws by which to explain and predict various phenomena.

The trouble comes when the data or phenomena in question are highly random–or inherently unpredictable (at least for the time being). In these areas, System 1 makes predictions that are often very wrong. And even System 2 assumes necessary logical connections when there may not be any–at least, none that can be discovered for some time.

Note: The eighteenth century Scottish philosopher (and psychologist) David Hume was one of the first to clearly recognize the human brain’s insistence on always assuming necessary logical connections in any set of data or phenomena.

If our goal is to explain certain phenomena scientifically, then we have to develop a testable hypothesis about what will happen (or what will happen with probability x) under specific, relevant conditions. If our hypothesis can’t accurately predict what will happen under specific, relevant conditions, then our hypothesis is not a valid scientific explanation.

20. Reason-respecting

We are more likely to comply with a request if people give us a reason–even if we don’t understand the reason or if it’s wrong. In one experiment, a person approaches people standing in line waiting to use a copy machine and says, “Excuse me, I have 5 pages. May I use the Xerox machine because I have to make some copies?” Nearly everyone agreed.

Bevelin notes that often the word “because” is enough to convince someone, even if no actual reason is given.

21. Believe First and Doubt Later

We are not natural skeptics. We find it easy to believe but difficult to doubt. Doubting is active and takes effort.

Bevelin continues:

Studies show that in order to understand some information, we must first accept it as true… We first believe all information we understand and only afterwards and with effort do we evaluate, and if necessary, un-believe it.

Distraction, fatigue, and stress tend to make us less likely to think things through and more likely to believe something that we normally might doubt.

When it comes to detecting lies, many (if not most) people are only slightly better than chance. Bevelin quotes Michel de Montaigne:

If falsehood, like truth, had only one face, we would be in better shape. For we would take as certain the opposite of what the liar said. But the reverse of truth has a hundred thousand shapes and a limitless field.

22. Memory Limitations

Bevelin:

Our memory is selective. We remember certain things and distort or forget others. Every time we recall an event, we reconstruct our memories. We only remember fragments of our real past experiences. Fragments influenced by what we have learned, our experiences, beliefs, mood, expectations, stress, and biases.

We remember things that are dramatic, fearful, emotional, or vivid. But when it comes to learning in general–as opposed to remembering–we learn better when we’re in a positive mood.

Human memory is flawed to the point that eyewitness identification evidence has been a significant cause of wrongful convictions. Moreover, leading and suggestive questions can cause misidentification. Bevelin:

Studies show that it is easy to get a witness to believe they saw something when they didn’t. Merely let some time pass between their observation and the questioning. Then give them false or emotional information about the event.

23. Do-something Syndrome

Activity is not the same thing as results. Most people feel impelled by boredom or hubris to be active. But many things are not worth doing.

If we’re long-term investors, then nearly all of the time the best thing for us to do is nothing at all (other than learn). This is especially true if we’re tired, stressed, or emotional.

24. Say-something Syndrome

Many people have a hard time either saying nothing or saying, “I don’t know.” But it’s better for us to say nothing if we have nothing to say. It’s better to admit “I don’t know” rather than pretend to know.

25. Emotions

Bevelin writes:

We saw under loss aversion and deprival that we put a higher value on things we already own than on the same things if we don’t own them. Sadness reverses this effect, making us willing to accept less money to sell something than we would pay to buy it.

It’s also worth repeating: If we feel emotional, it’s best to defer important decisions whenever possible.

26. Stress

A study showed that business executives who are committed to their work and who have a positive attitude towards challenges–viewing them as opportunities for growth–do not get sick from stress. Business executives who lack such commitment or who lack a positive attitude towards challenges are more likely to get sick from stress.

Stress itself is essential to life. We need challenges. What harms us is not stress but distress–unnecessary anxiety and unhelpful trains of thought. Bevelin quotes the stoic philosopher Epictetus:

Happiness and freedom begin with a clear understanding of one principle: Some things are within our control, and some things are not. It is only after you have faced up to this fundamental rule and learned to distinguish between what you can and can’t control that inner tranquility and outer effectiveness become possible.

27. Pain and Chemicals

People struggle to think clearly when they are in pain or when they’re drunk or high.

Munger argues that if we want to live a good life, first we should list the things that can ruin a life. Alcohol and drugs are near the top of the list. Self-pity and a poor mental attitude will also be on that list. We can’t control everything that happens, but we can always control our mental attitude. As the Austrian psychiatrist and Holocaust survivor Viktor Frankl said:

Everything can be taken from a man but one thing: the last of the human freedoms–to choose one’s attitude in any given set of circumstances, to choose one’s own way.

28. Multiple Tendencies

Often multiple psychological tendencies operate at the same time. Bevelin gives an example where the CEO makes a decision and expects the board of directors to go along without any real questions. Bevelin explains:

Apart from incentive-caused bias, liking, and social approval, what are some other tendencies that operate here? Authority–the CEO is the authority figure whom directors tend to trust and obey. He may also make it difficult for those who question him. Social proof–the CEO is doing dumb things but no one else is objecting so all directors collectively stay quiet–silence equals consent; illusions of the group as invulnerable and group pressure (loyalty) may also contribute. Reciprocation–unwelcome information is withheld since the CEO is raising the director fees, giving them perks, taking them on trips or letting them use the corporate jet. Association and Persian Messenger Syndrome–a single director doesn’t want to be the carrier of bad news. Self-serving tendencies and optimism–feelings of confidence and optimism: many boards also select new directors who are much like themselves; that share similar ideological viewpoints. Deprival–directors don’t want to lose income and status. Respecting reasons no matter how illogical–the CEO gives them reasons. Believing first and doubting later–believing what the CEO says even if not true, especially when distracted. Consistency–directors want to be consistent with earlier decisions–dumb or not.

Part Three: The Physics and Mathematics of Misjudgments

SYSTEMS THINKING

- Failing to consider that actions have both intended and unintended consequences. Includes failing to consider secondary and higher order consequences and inevitable implications.

- Failing to consider the whole system in which actions and reactions take place, the important factors that make up the system, their relationships and effects of changes on system outcome.

- Failing to consider the likely reaction of others–what is best to do may depend on what others do.

- Failing to consider the implications of winning a bid–overestimating value and paying too much.

- Overestimating predictive ability or using unknowable factors in making predictions.

SCALE AND LIMITS

- Failing to consider that changes in size or time influence form, function, and behavior.

- Failing to consider breakpoints, critical thresholds, or limits.

- Failing to consider constraints–that a system’s performance is constrained by its weakest link.

CAUSES

- Not understanding what causes desired results.

- Believing cause resembles its effect–that a big effect must have a big or complicated cause.

- Underestimating the influence of randomness in bad or good outcomes.

- Mistaking an effect for its cause. Includes failing to consider that many effects may originate from one common root cause.

- Attributing outcome to a single cause when there are multiple causes.

- Mistaking correlation for cause.

- Failing to consider that an outcome may be consistent with alternative explanations.

- Drawing conclusions about causes from selective data. Includes identifying the wrong cause because it seems the obvious one based on a single observed effect. Also failing to consider information or evidence that is missing.

- Not comparing the difference in conditions, behavior, and factors between negative and positive outcomes in similar situations when explaining an outcome.

NUMBERS AND THEIR MEANING

- Looking at isolated numbers–failing to consider relationships and magnitudes. Includes not using basic math to count and quantify. Also not differentiating between relative and absolute risk.

- Underestimating the effect of exponential growth.

- Underestimating the time value of money.
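As a rough illustration of the last two items, here is a minimal Python sketch of compound growth and discounting. The function names, the $1,000 amount, and the 10% rate are my own illustrative assumptions, not figures from Bevelin.

```python
def future_value(principal: float, rate: float, years: int) -> float:
    """Value of `principal` after compounding at `rate` per year for `years` years."""
    return principal * (1 + rate) ** years


def present_value(amount: float, rate: float, years: int) -> float:
    """Value today of `amount` received `years` from now, discounted at `rate`."""
    return amount / (1 + rate) ** years


# Hypothetical inputs: $1,000 growing at an assumed 10% per year.
for years in (10, 20, 40):
    print(years, round(future_value(1_000, 0.10, years)))

# The same $1,000, received 20 years from now, expressed in today's money.
print(round(present_value(1_000, 0.10, 20), 2))
```

At the assumed 10% rate, stretching the horizon from 20 to 40 years multiplies the ending value by more than six, which is the kind of result that intuition tends to underestimate.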

PROBABILITIES AND NUMBER OF POSSIBLE OUTCOMES

- Underestimating risk exposure in situations where relative frequency (or comparable data) and/or magnitude of consequences is unknown or changing over time.

- Underestimating the number of possible outcomes for unwanted events. Includes underestimating the probability and severity of rare or extreme events.

- Overestimating the chance of rare but widely publicized and highly emotional events and underestimating the chance of common but less publicized events.

- Failing to consider both probabilities and consequences (expected value).

- Believing events where chance plays a role are self-correcting–that previous outcomes of independent events have predictive value in determining future outcomes.

- Believing one can control the outcome of events where chance is involved.

- Judging financial decisions by evaluating gains and losses instead of final state of wealth and personal value.

- Failing to consider the consequences of being wrong.
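The point about weighing both probabilities and consequences can be sketched in a few lines of Python. The two bets below are invented for illustration; they are not examples from the book.

```python
def expected_value(outcomes):
    """Sum of probability * payoff over a list of (probability, payoff) pairs."""
    total_probability = sum(p for p, _ in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * payoff for p, payoff in outcomes)


# Hypothetical bet A: wins most of the time, but the rare loss is large.
likely_small_win = [(0.9, 10.0), (0.1, -200.0)]
# Hypothetical bet B: loses most of the time, but the rare win is large.
unlikely_big_win = [(0.2, 100.0), (0.8, -10.0)]

print(expected_value(likely_small_win))
print(expected_value(unlikely_big_win))
```

Bet A feels safer because it usually wins, yet its expected value is negative, while bet B usually loses and has a positive expected value. Expected value is only part of the picture: the list above also asks us to consider final wealth and the consequences of being wrong, which this sketch ignores.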

SCENARIOS

- Overestimating the probability of scenarios where all of a series of steps must be achieved for a wanted outcome. Also underestimating opportunities for failure and what normally happens in similar situations.

- Underestimating the probability of systems failure–scenarios composed of many parts where system failure can happen one way or another. Includes failing to consider that time horizon changes probabilities. Also assuming independence when it is not present and/or assuming events are equally likely when they are not.

- Not adding a factor of safety for known and unknown risks. Size of factor depends on the consequences of failure, how well the risks are understood, systems characteristics, and degree of control.
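A small Python sketch makes the first two scenario errors concrete. It assumes the steps and components are independent, which is exactly the assumption the list above warns may not hold in practice; the probabilities are hypothetical.

```python
from math import prod


def chain_success(step_probs):
    """Probability that every step succeeds, assuming independent steps."""
    return prod(step_probs)


def any_failure(failure_probs):
    """Probability that at least one component fails, assuming independence."""
    return 1 - prod(1 - p for p in failure_probs)


# Hypothetical plan: seven steps, each 90% likely to succeed.
print(round(chain_success([0.9] * 7), 3))

# Hypothetical system: fifty parts, each with a 1% chance of failing.
print(round(any_failure([0.01] * 50), 3))
```

Under these assumptions, a plan made of seven individually likely steps succeeds less than half the time, and a system built from fifty very reliable parts fails somewhere more than a third of the time.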

COINCIDENCES AND MIRACLES

- Underestimating that surprises and improbable events happen, somewhere, sometime, to someone, if they have enough opportunities (large enough size or time) to happen.

- Looking for meaning, searching for causes, and making up patterns for chance events, especially events that have emotional implications.

- Failing to consider cases involving the absence of a cause or effect.

RELIABILITY OF CASE EVIDENCE

- Overweighing individual case evidence and under-weighing the prior probability (probability estimate of an event before considering new evidence that might change it) considering for example, the base rate (relative frequency of an attribute or event in a representative comparison group), or evidence from many similar cases. Includes failing to consider the probability of a random match, and the probability of a false positive and a false negative. Also failing to consider a relevant comparison population that bears the characteristic we are seeking.
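Here is a minimal sketch of how the base rate and the false-positive rate combine, using Bayes' rule. The 1% base rate and the test accuracy figures are hypothetical numbers chosen for illustration.

```python
def posterior(base_rate: float, sensitivity: float, false_positive_rate: float) -> float:
    """P(condition | positive result), computed with Bayes' rule."""
    true_positives = base_rate * sensitivity
    false_positives = (1 - base_rate) * false_positive_rate
    return true_positives / (true_positives + false_positives)


# Hypothetical test: the condition affects 1% of the comparison group,
# the test catches 95% of real cases, and it wrongly flags 5% of healthy people.
print(round(posterior(0.01, 0.95, 0.05), 3))
```

With these assumed numbers, a positive result from a seemingly accurate test still leaves the probability of the condition well below one in five, because false positives from the large unaffected group outnumber the true positives.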

MISREPRESENTATIVE EVIDENCE

- Failing to consider changes in factors, context, or conditions when using past evidence to predict likely future outcomes. Includes not searching for explanations to why a past outcome happened, what is required to make the past record continue, and what forces can change it.

- Overestimating evidence from a single case or small or unrepresentative samples.

- Underestimating the influence of chance in performance (success and failure).

- Only seeing positive outcomes–paying little or no attention to negative outcomes and prior probabilities.

- Failing to consider variability of outcomes and their frequency.

- Failing to consider regression–in any series of events where chance is involved, unique outcomes tend to regress back to the average outcome.
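Regression toward the average can be seen in a toy simulation. The model below is my own assumption, not Bevelin's: each performer has a fixed skill level, and each period's result is that skill plus independent luck of the same size.

```python
import random
from statistics import mean


def simulate(n: int = 10_000, seed: int = 0):
    """Return the top decile's average result in period one and in period two."""
    rng = random.Random(seed)
    skill = [rng.gauss(0, 1) for _ in range(n)]
    first = [s + rng.gauss(0, 1) for s in skill]   # skill plus luck, period one
    second = [s + rng.gauss(0, 1) for s in skill]  # same skill, fresh luck
    # Pick the best 10% of performers based on period one only.
    top = sorted(range(n), key=lambda i: first[i], reverse=True)[: n // 10]
    return mean(first[i] for i in top), mean(second[i] for i in top)


top_first, top_second = simulate()
print(round(top_first, 2), round(top_second, 2))
```

In this toy model, the period-one stars remain above average in period two, but by a noticeably smaller margin, because part of what put them at the top was luck that does not repeat.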

Part Four: Guidelines to Better Thinking

Bevelin explains: “The purpose of this part is to explore tools that provide a foundation for rational thinking. Ideas that help us when achieving goals, explaining ‘why,’ preventing and reducing mistakes, solving problems, and evaluating statements.”

Bevelin lists 12 tools that he discusses:

- Models of reality

- Meaning

- Simplification

- Rules and filters

- Goals

- Alternatives

- Consequences

- Quantification

- Evidence

- Backward thinking

- Risk

- Attitudes

MODELS OF REALITY

Bevelin:

A model is an idea that helps us better understand how the world works. Models illustrate consequences and answer questions like ‘why’ and ‘how.’ Take the model of social proof as an example. What happens? When people are uncertain they often automatically do what others do without thinking about the correct thing to do. This idea helps explain ‘why’ and predict ‘how’ people are likely to behave in certain situations.

Bevelin continues:

Ask: What is the underlying big idea? Do I understand its application in practical life? Does it help me understand the world? How does it work? Why does it work? Under what conditions does it work? How reliable is it? What are its limitations? How does it relate to other models?

What models are most reliable? Bevelin quotes Munger:

“The models that come from hard science and engineering are the most reliable models on this Earth. And engineering quality control–at least the guts of it that matters to you and me and people who are not professional engineers–is very much based on the elementary mathematics of Fermat and Pascal: It costs so much and you get so much less likelihood of it breaking if you spend this much…

And, of course, the engineering idea of a backup system is a very powerful idea. The engineering idea of breakpoints–that’s a very powerful model, too. The notion of a critical mass–that comes out of physics–is a very powerful model.”

Bevelin adds:

A valuable model produces meaningful explanations and predictions of likely future consequences where the cost of being wrong is high.

A model should be easy to use. If it is complicated, we don’t use it.

It is useful on a nearly daily basis. If it is not used, we forget it.

Bevelin asks what can help us to see the big picture. Bevelin quotes Munger again:

“In most messy human problems, you have to be able to use all the big ideas and not just a few of them.”

Bevelin notes that physics does not explain everything, and neither does economics. In business, writes Bevelin, it is useful to know how scale changes behavior, how systems may break, how supply influences prices, and how incentives cause behavior.

It’s also crucial to know how different ideas interact and combine. Munger again:

“You get lollapalooza effects when two, three, or four forces are all operating in the same direction. And, frequently, you don’t get simple addition. It’s often like a critical mass in physics where you get a nuclear explosion if you get to a certain point of mass–and you don’t get anything much worth seeing if you don’t reach the mass.

Sometimes the forces just add like ordinary quantities and sometimes they combine on a break-point or critical-mass basis… More commonly, the forces coming out of models are conflicting to some extent… So you [must] have the models and you [must] see the relatedness and the effects from the relatedness.”

MEANING

Bevelin writes:

Understanding ‘meaning’ requires that we observe and ask basic questions. Examples of some questions are:

- Meaning of words: What do the words mean? What do they imply? Do they mean anything? Can we translate words, ideas, or statements into an ordinary situation that tells us something? An expression is always relative. We have to judge and measure it against something.

- Meaning of an event: What effect is produced? What is really happening using ordinary words? What is it doing? What is accomplished? Under what conditions does it happen? What else does it mean?

- Causes: What is happening here and why? Is this working? Why or why not? Why did that happen? Why does it work here but not there? How can it happen? What are the mechanisms behind? What makes it happen?

- Implications: What is the consequence of this observation, event, or experience? What does that imply?

- Purpose: Why should we do that? Why do I want this to happen?

- Reason: Why is this better than that?

- Usefulness: What is the applicability of this? Does it mean anything in relation to what I want to achieve?

Turning to the field of investing, how do we decide how much to pay for a business? Buying stock is buying a fractional share of a business. Bevelin quotes Warren Buffett:

What you’re trying to do is to look at all the cash a business will produce between now and judgment day and discount it back to the present using an appropriate discount rate and buy a lot cheaper than that. Whether the money comes from a bank, an Internet company, a brick company… the money all spends the same. Why pay more for a telecom business than a brick business? Money doesn’t know where it comes from. There’s no sense in paying more for a glamorous business if you’re getting the same amount of money, but paying more for it. It’s the same money that you can get from a brick company at a lower cost. The question is what are the economic characteristics of the bank, the Internet company, or the brick company. That’s going to tell you how much cash they generate over long periods in the future.
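Buffett's description translates into a short discounted cash flow sketch. The finite five-year horizon, the cash flow figures, the 10% discount rate, and the 30% margin of safety are all simplifying assumptions of mine; Buffett speaks of all cash “between now and judgment day” and names no numbers.

```python
def intrinsic_value(cash_flows, discount_rate: float) -> float:
    """Present value of yearly cash flows, the first arriving one year from now."""
    return sum(
        cash / (1 + discount_rate) ** year
        for year, cash in enumerate(cash_flows, start=1)
    )


def max_purchase_price(value: float, margin_of_safety: float) -> float:
    """Price that is `margin_of_safety` (a fraction) below the estimated value."""
    return value * (1 - margin_of_safety)


# Hypothetical businesses with identical projected cash flows.
brick_company = [100.0, 110.0, 120.0, 130.0, 140.0]
telecom_company = [100.0, 110.0, 120.0, 130.0, 140.0]

brick_value = intrinsic_value(brick_company, 0.10)
telecom_value = intrinsic_value(telecom_company, 0.10)

print(round(brick_value, 2), round(telecom_value, 2))
print(round(max_purchase_price(brick_value, 0.30), 2))
```

The function never asks what industry the cash comes from, so the two businesses receive the same value. The hard part, as Buffett notes, is estimating the cash flows themselves from the economic characteristics of each business.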

SIMPLIFICATION

Bevelin quotes Munger:

We have a passion for keeping things simple.

Bevelin then quotes Buffett:

We haven’t succeeded because we have some great, complicated systems or magic formulas we apply or anything of the sort. What we have is just simplicity itself.

Munger again:

If something is too hard, we move on to something else. What could be more simple than that?

Munger:

There are things that we stay away from. We’re like the man who said he had three baskets on his desk: in, out, and too tough. We have such baskets–mental baskets–in our office. An awful lot of stuff goes in the ‘too tough’ basket.

Buffett on how he and Charlie Munger do it:

Easy does it. After 25 years of buying and supervising a great variety of businesses, Charlie and I have not learned how to solve difficult business problems. What we have learned is to avoid them. To the extent we have been successful, it is because we concentrated on identifying one-foot hurdles that we could step over rather than because we acquired any ability to clear seven-footers. The finding may seem unfair, but in both business and investments it is usually far more profitable to simply stick with the easy and obvious than it is to resolve the difficult.

It’s essential that management maintain focus. Buffett:

A… serious problem occurs when the management of a great company gets sidetracked and neglects its wonderful base business while purchasing other businesses that are so-so or worse… (Would you believe that a few decades back they were growing shrimp at Coke and exploring for oil at Gillette?) Loss of focus is what most worries Charlie and me when we contemplate investing in businesses that in general look outstanding. All too often, we’ve seen value stagnate in the presence of hubris or of boredom that caused the attention of managers to wander.

For an investor considering an investment, it’s crucial to identify what is knowable and what is important. Buffett:

There are two questions you ask yourself as you look at the decision you’ll make. A) is it knowable? B) is it important? If it is not knowable, as you know there are all kinds of things that are important but not knowable, we forget about those. And if it’s unimportant, whether it’s knowable or not, it won’t make any difference. We don’t care.

RULES AND FILTERS

Bevelin writes:

When we know what we want, we need criteria to evaluate alternatives. Ask: What are the most critical (and knowable) factors that will cause what I want to achieve or avoid? Criteria must be based on evidence and be reasonably predictive… Try to use as few criteria as necessary to make your judgment. Then rank them in order of their importance and use them as filters. Set decision thresholds in a way that minimizes the likelihood of false alarms and misses (in investing, choosing a bad investment or missing a good investment). Consider the consequences of being wrong.

Bear in mind that in many fields, a relatively simple statistical prediction rule based on a few key variables will perform better than experts over time. See: https://boolefund.com/simple-quant-models-beat-experts-in-a-wide-variety-of-areas/

Bevelin gives as an example the following: a man is rushed to the hospital while having a heart attack. Is it high-risk or low-risk? If the patient’s minimum systolic blood pressure over the initial 24-hour period is less than 91, then it’s high-risk. If not, then the next question is age. If the patient is over 62.5 years old, then if he displays sinus tachycardia, he is high-risk. It turns out that this simple model–developed by Statistics Professor Leo Breiman and colleagues at the University of California, Berkeley–works better than more complex models and also than experts.
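The rule described above is small enough to write out as code. This is only a sketch of the three questions as summarized here, with function and parameter names of my own choosing; treating every case not flagged as high-risk as low-risk is an assumption of the sketch.

```python
def heart_attack_risk(min_systolic_bp: float, age: float, sinus_tachycardia: bool) -> str:
    """Classify a patient as 'high' or 'low' risk using three questions in order."""
    # Question 1: minimum systolic blood pressure over the initial 24 hours.
    if min_systolic_bp < 91:
        return "high"
    # Questions 2 and 3: age, then sinus tachycardia.
    if age > 62.5 and sinus_tachycardia:
        return "high"
    # Assumption of this sketch: everything else is low-risk.
    return "low"


# Hypothetical patients.
print(heart_attack_risk(85, 50, False))
print(heart_attack_risk(120, 70, True))
print(heart_attack_risk(120, 70, False))
```

Each question acts as a filter, and most patients are classified after one or two of them, which is part of what makes a rule like this easy to apply under pressure.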

In making an investment decision, Buffett has said that he uses a 4-step filter:

- Can I understand it?

- Does it look like it has some kind of sustainable competitive advantage?

- Is the management composed of able and honest people?

- Is the price right?

If a potential investment passes all four filters, then Buffett writes a check. By “understanding,” Buffett means having a “reasonable probability” of assessing where the business will be in 10 years.

GOALS

Bevelin puts forth:

Always ask: What end result do I want? What causes that? What factors have a major impact on the outcome? What single factor has the most impact? Do I have the variable(s) needed for the goal to be achieved? What is the best way to achieve my goal? Have I considered what other effects my actions will have that will influence the final outcome?

When we solve problems and know what we want to achieve, we need to prioritize or focus on the right problems. What should we do first? Ask: How serious are the problems? Are they fixable? What is the most important problem? Are the assumptions behind them correct? Did we consider the interconnectedness of the problems? The long-term consequences?

ALTERNATIVES

Bevelin writes:

Choices have costs. Even understanding has an opportunity cost. If we understand one thing well, we may understand other things better. The cost of using a limited resource like time, effort, and money for a specific purpose, can be measured as the value or opportunity lost by not using it in its best available alternative use…

Bevelin considers a business:

Should TransCorp take the time, money, and talent to build a market presence in Montana? The real cost of doing that is the value of the time, money, and talent used in its best alternative use. Maybe increasing their presence in a state where they already have a market share is creating more value. Sometimes it is more profitable to marginally increase a cost where a company already has an infrastructure. Where do they marginally get the most leverage on resources spent? Always ask: What is the change of value of taking a specific action? Where is it best to invest resources from a value point of view?

CONSEQUENCES

Bevelin writes:

Whenever we install a policy, take an action, or evaluate statements, we must trace the consequences. When doing so, we must remember four key things:

- Pay attention to the whole system. Direct and indirect effects,

- Consequences have implications or more consequences, some which may be unwanted. We can’t estimate all possible consequences but there is at least one unwanted consequence we should look out for,

- Consider the effects of feedback, time, scale, repetition, critical thresholds and limits,

- Different alternatives have different consequences in terms of costs and benefits. Estimate the net effects over time and how desirable these are compared to what we want to achieve.

We should heed Buffett’s advice: Whenever someone makes an assertion in economics, always ask, “And then what?” Very often, particularly in economics, it’s the consequences of the consequences that matter.

BOOLE MICROCAP FUND

An equal weighted group of micro caps generally far outperforms an equal weighted (or cap-weighted) group of larger stocks over time. See the historical chart here: https://boolefund.com/best-performers-microcap-stocks/

This outperformance increases significantly by focusing on cheap micro caps. Performance can be further boosted by isolating cheap microcap companies that show improving fundamentals. We rank microcap stocks based on these and similar criteria.

There are roughly 10-20 positions in the portfolio. The size of each position is determined by its rank. Typically the largest position is 15-20% (at cost), while the average position is 8-10% (at cost). Positions are held for 3 to 5 years unless a stock approaches intrinsic value sooner or an error has been discovered.

The mission of the Boole Fund is to outperform the S&P 500 Index by at least 5% per year (net of fees) over 5-year periods. We also aim to outpace the Russell Microcap Index by at least 2% per year (net). The Boole Fund has low fees.

If you are interested in finding out more, please e-mail me or leave a comment.

My e-mail: jb@boolefund.com

Disclosures: Past performance is not a guarantee or a reliable indicator of future results. All investments contain risk and may lose value. This material is distributed for informational purposes only. Forecasts, estimates, and certain information contained herein should not be considered as investment advice or a recommendation of any particular security, strategy or investment product. Information contained herein has been obtained from sources believed to be reliable, but not guaranteed. No part of this article may be reproduced in any form, or referred to in any other publication, without express written permission of Boole Capital, LLC.
