September 25, 2022

Nassim Nicholas Taleb is the author of several books, including Fooled by Randomness, The Black Swan, and Antifragile. I wrote about Fooled by Randomness here: https://boolefund.com/fooled-by-randomness/

Today’s blog post is a summary of Taleb’s The Black Swan. If you’re an investor, or if you have any interest in predictions or in history, then this is a MUST-READ book. One of Taleb’s main points is that Black Swans, which are unpredictable, can be either positive or negative. It’s crucial to try to be prepared for negative Black Swans and to try to benefit from positive Black Swans. However, many measurements of risk in finance assume a statistical distribution that is normal when they should assume a distribution that is fat-tailed. These standard measures of risk won’t prepare you for a Black Swan.

That said, Taleb is an option trader, whereas I am a value investor. For me, if you buy a stock far below liquidation value, then usually you have a margin of safety. A group of such stocks will outperform the market over time while carrying low risk. Furthermore, if you’re a long-term investor, then you can either adopt a value investing approach or you can simply invest in low-cost index funds. Either way, given a long enough period of time, you should get good results. The market has recovered from every crash and has eventually gone on to new highs. Yet Taleb misses this point.

Nonetheless, although Taleb overlooks value investing and index funds, his views on predictions and on history are very insightful and should be studied by every thinking person.

Here’s the outline:

- Prologue

PART ONE: UMBERTO ECO’S ANTILIBRARY, OR HOW WE SEEK VALIDATION

- Chapter 1: The Apprenticeship of an Empirical Skeptic

- Chapter 2: Yevgenia’s Black Swan

- Chapter 3: The Speculator and the Prostitute

- Chapter 4: One Thousand and One Days, or How Not to Be a Sucker

- Chapter 5: Confirmation Shmonfirmation!

- Chapter 6: The Narrative Fallacy

- Chapter 7: Living in the Antechamber of Hope

- Chapter 8: Giacomo Casanova’s Unfailing Luck: The Problem of Silent Evidence

- Chapter 9: The Ludic Fallacy, or the Uncertainty of the Nerd

PART TWO: WE JUST CAN’T PREDICT

- Chapter 10: The Scandal of Prediction

- Chapter 11: How to Look for Bird Poop

- Chapter 12: Epistemocracy, a Dream

- Chapter 13: Apelles the Painter, or What Do You Do if You Cannot Predict?

PART THREE: THOSE GRAY SWANS OF EXTREMISTAN

- Chapter 14: From Mediocristan to Extremistan, and Back

- Chapter 15: The Bell Curve, That Great Intellectual Fraud

- Chapter 16: The Aesthetics of Randomness

- Chapter 17: Locke’s Madmen, or Bell Curves in the Wrong Places

- Chapter 18: The Uncertainty of the Phony

PART FOUR: THE END

- Chapter 19: Half and Half, or How to Get Even with the Black Swan

PROLOGUE

Taleb writes:

Before the discovery of Australia, people in the Old World were convinced that all swans were white, an unassailable belief as it seemed completely confirmed by empirical evidence… It illustrates a severe limitation to our learning from observations or experience and the fragility of our knowledge. One single observation can invalidate a general statement derived from millennia of confirmatory sightings of millions of white swans.

Taleb defines a black swan as having three attributes:

- First, it is an outlier, as it lies outside the realm of regular expectations, because nothing in the past can convincingly point to its possibility.

- Second, it carries an extreme impact.

- Third, in spite of its outlier status, human nature makes us concoct explanations for its occurrence after the fact, making it explainable and predictable.

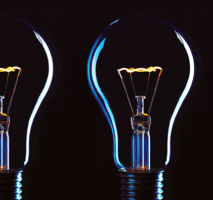

Taleb notes that the effect of Black Swans has been increasing in recent centuries. Furthermore, social scientists still assume that risks can be modeled using the normal distribution, i.e., the bell curve. Social scientists have not incorporated “Fat Tails” into their assumptions about risk. (A fat-tailed statistical distribution, as compared to a normal distribution, carries higher probabilities for extreme outliers.)

(Illustration by Peter Hermes Furian: The red curve is a normal distribution, whereas the orange curve has fat tails.)
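To make the parenthetical about fat tails concrete, here is a minimal sketch that compares the chance of exceeding a threshold `k` under a standard normal distribution and under one fat-tailed alternative. The choice of a Student's t distribution with 3 degrees of freedom is my assumption for illustration, not something Taleb specifies, and `k` is measured in each distribution's own scale units rather than in matched standard deviations.

```python
import math


def normal_tail(k: float) -> float:
    """P(Z > k) for a standard normal variable."""
    return 0.5 * math.erfc(k / math.sqrt(2.0))


def student_t3_tail(k: float) -> float:
    """P(T > k) for a Student's t variable with 3 degrees of freedom.

    Uses the closed-form CDF that exists for this particular case
    (an illustrative fat-tailed distribution, chosen as an assumption).
    """
    u = k / math.sqrt(3.0)
    cdf = 0.5 + (u / (1.0 + u * u) + math.atan(u)) / math.pi
    return 1.0 - cdf


if __name__ == "__main__":
    # Print both tail probabilities and how many times larger the fat tail is.
    for k in (2, 3, 5, 10):
        n, t = normal_tail(k), student_t3_tail(k)
        print(f"k={k:>2}  normal={n:.3e}  fat-tailed={t:.3e}  ratio={t / n:,.0f}")
```

The further out you go, the larger the gap: a risk model built on the bell curve treats as practically impossible an event that the fat-tailed model treats as merely rare.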

Taleb continues:

Black Swan logic makes what you don’t know far more relevant than what you do know. Consider that many Black Swans can be caused and exacerbated by their being unexpected.

Taleb mentions the Sept. 11, 2001 terrorist attack on the Twin Towers. If such an attack had been expected, then it would have been prevented. Taleb:

Isn’t it strange to see an event happening precisely because it was not supposed to happen? What kind of defense do we have against that? … It may be odd that, in such a strategic game, what you know can be truly inconsequential.

Taleb argues that Black Swan logic applies to many areas in business and also to scientific theories. Taleb makes a general point about history:

The inability to predict outliers implies the inability to predict the course of history, given the share of these events in the dynamics of events.

Indeed, people, especially experts, have a terrible record in forecasting political and economic events. Taleb advises:

Black Swans being unpredictable, we need to adjust to their existence (rather than naively try to predict them). There are so many things we can do if we focus on antiknowledge, or what we do not know. Among many other benefits, you can set yourself up to collect serendipitous Black Swans (of the positive kind) by maximizing your exposure to them. Indeed, in some domains--such as scientific discovery and venture capital investments--there is a disproportionate payoff from the unknown, since you typically have little to lose and plenty to gain from a rare event… The strategy is, then, to tinker as much as possible and try to collect as many Black Swan opportunities as you can.

Taleb introduces the terms Platonicity and the Platonic fold:

Platonicity is what makes us think that we understand more than we actually do. But this does not happen everywhere. I am not saying that Platonic forms don’t exist. Models and constructions, these intellectual maps of reality, are not always wrong; they are wrong only in some specific applications. The difficulty is that a) you do not know beforehand (only after the fact) where the map will be wrong, and b) the mistakes can lead to severe consequences…

The Platonic fold is the explosive boundary where the Platonic mindset enters in contact with messy reality, where the gap between what you know and what you think you know becomes dangerously wide. It is here that the Black Swan is produced.

PART ONE: UMBERTO ECO’S ANTILIBRARY, OR HOW WE SEEK VALIDATION

Umberto Eco’s personal library contains thirty thousand books. But what’s important are the books he has not yet read. Taleb:

Read books are far less valuable than unread books. The library should contain as much of what you do not know as your financial means, mortgage rates, and the currently tight real-estate market allow you to put there… Indeed, the more you know, the larger the rows of unread books. Let us call this collection of unread books an antilibrary.

Taleb adds:

Let us call an antischolar--someone who focuses on the unread books, and makes an attempt not to treat his knowledge as a treasure, or even a possession, or even a self-esteem enhancement device--a skeptical empiricist.

(Photo by Pp1)

CHAPTER 1: THE APPRENTICESHIP OF AN EMPIRICAL SKEPTIC

Taleb says his family is from “the Greco-Syrian community, the last Byzantine outpost in northern Syria, which included what is now called Lebanon.” Taleb writes:

People felt connected to everything they felt was worth connecting to; the place was exceedingly open to the world, with a vastly sophisticated lifestyle, a prosperous economy, and temperate weather just like California, with snow-covered mountains jutting above the Mediterranean. It attracted a collection of spies (both Soviet and Western), prostitutes (blondes), writers, poets, drug dealers, adventurers, compulsive gamblers, tennis players, apres-skiers, and merchants--all professions that complement one another.

Taleb writes about when he was a teenager. He was a “rebellious idealist” with an “ascetic taste.” Taleb:

As a teenager, I could not wait to go settle in a metropolis with fewer James Bond types around. Yet I recall something that felt special in the intellectual air. I attended the French lycee that had one of the highest success rates for the French baccalaureat (the high school degree), even in the subject of the French language. French was spoken there with some purity: as in prerevolutionary Russia, the Levantine Christian and Jewish patrician class (from Istanbul to Alexandria) spoke and wrote formal French as a language of distinction. The most privileged were sent to school in France, as both my grandfathers were… Two thousand years earlier, by the same instinct of linguistic distinction, the snobbish Levantine patricians wrote in Greek, not the vernacular Aramaic… And, after Hellenism declined, they took up Arabic. So in addition to being called a “paradise,” the place was also said to be a miraculous crossroads of what are superficially tagged “Eastern” and “Western” cultures.

Then a Black Swan hit:

The Lebanese “paradise” suddenly evaporated, after a few bullets and mortar shells… after close to thirteen centuries of remarkable ethnic coexistence, a Black Swan, coming out of nowhere, transformed the place from heaven to hell. A fierce civil war began between Christians and Moslems, including the Palestinian refugees who took the Moslem side. It was brutal, since the combat zones were in the center of town and most of the fighting took place in residential areas (my high school was only a few hundred feet from the war zone). The conflict lasted more than a decade and a half.

Taleb makes a general point about history:

The human mind suffers from three ailments as it comes into contact with history, what I call the triplet of opacity. They are:

- the illusion of understanding, or how everyone thinks he knows what is going on in a world that is more complicated (or random) than they realize;

- the retrospective distortion, or how we can assess matters only after the fact, as if they were in a rearview mirror (history seems clearer and more organized in history books than in empirical reality); and

- the overvaluation of factual information and the handicap of authoritative and learned people, particularly when they create categories--when they “Platonify.”

Taleb points out that a diary is a good way to record events as they are happening. This can help later to put events in their context.

Taleb writes about the danger of oversimplification:

Any reduction of the world around us can have explosive consequences since it rules out some sources of uncertainty; it drives us to a misunderstanding of the fabric of the world. For instance, you may think that radical Islam (and its values) are your allies against the threat of Communism, and so you may help them develop, until they send two planes into downtown Manhattan.

CHAPTER 2: YEVGENIA’S BLACK SWAN

Taleb:

Five years ago, Yevgenia Nikolayevna Krasnova was an obscure and unpublished novelist, with an unusual background. She was a neuroscientist with an interest in philosophy (her first three husbands had been philosophers), and she got it into her stubborn Franco-Russian head to express her research and ideas in literary form.

Most publishers largely ignored Yevgenia. Publishers who did look at Yevgenia’s book were confused because she couldn’t seem to answer the most basic questions. “Is this fiction or nonfiction?” “Who is this book written for?” (Five years ago, Yevgenia attended a famous writing workshop. The instructor told her that her case was hopeless.)

Eventually the owner of a small unknown publishing house agreed to publish Yevgenia’s book. Taleb:

It took five years for Yevgenia to graduate from the “egomaniac without anything to justify it, stubborn and difficult to deal with” category to “persevering, resolute, painstaking, and fiercely independent.” For her book slowly caught fire, becoming one of the great and strange successes in literary history, selling millions of copies and drawing so-called critical acclaim…

Yevgenia’s book is a Black Swan.

CHAPTER 3: THE SPECULATOR AND THE PROSTITUTE

Taleb introduces Mediocristan and Extremistan:

| Mediocristan | Extremistan |
| --- | --- |
| Nonscalable | Scalable |
| Mild or type 1 randomness | Wild (even superwild) type 2 randomness |
| The most typical member is mediocre | The most “typical” is either giant or dwarf, i.e., there is no typical member |
| Winners get a small segment of the total pie | Winner-take-almost-all effects |
| Example: Audience of an opera singer before the gramophone | Today’s audience for an artist |
| More likely to be found in our ancestral environment | More likely to be found in our modern environment |
| Impervious to the Black Swan | Vulnerable to the Black Swan |
| Subject to gravity | There are no physical constraints on what a number can be |
| Corresponds (generally) to physical quantities, i.e., height | Corresponds to numbers, say, wealth |
| As close to utopian equality as reality can spontaneously deliver | Dominated by extreme winner-take-all inequality |
| Total is not determined by a single instance or observation | Total will be determined by a small number of extreme events |
| When you observe for a while you can get to know what’s going on | It takes a long time to get to know what’s going on |
| Tyranny of the collective | Tyranny of the accidental |
| Easy to predict from what you see and extend to what you do not see | Hard to predict from past information |
| History crawls | History makes jumps |
| Events are distributed according to the “bell curve” or its variations | The distribution is either Mandelbrotian “gray” Swans (tractable scientifically) or totally intractable Black Swans |

Taleb observes that Yevgenia’s rise from “the second basement to superstar” is only possible in Extremistan.

(Photo by Flavijus)

Taleb comments on knowledge and Extremistan:

What you can know from data in Mediocristan augments very rapidly with the supply of information. But knowledge in Extremistan grows slowly and erratically with the addition of data, some of it extreme, possibly at an unknown rate.

Taleb gives many examples:

Matters that seem to belong to Mediocristan (subjected to what we call type 1 randomness): height, weight, calorie consumption, income for a baker, a small restaurant owner, a prostitute, or an orthodontist; gambling profits (in the very special case, assuming the person goes to a casino and maintains a constant betting size), car accidents, mortality rates, “IQ” (as measured).

Matters that seem to belong to Extremistan (subjected to what we call type 2 randomness): wealth, income, book sales per author, book citations per author, name recognition as a “celebrity,” number of references on Google, populations of cities, uses of words in a vocabulary, numbers of speakers per language, damage caused by earthquakes, deaths in war, deaths from terrorist incidents, sizes of planets, sizes of companies, stock ownership, height between species (consider elephants and mice), financial markets (but your investment manager does not know it), commodity prices, inflation rates, economic data. The Extremistan list is much longer than the prior one.
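The contrast between the two lists can be sketched with a small simulation: how much of a sample's total comes from its single largest member? The distributions and parameters below are my own illustrative assumptions (a bell curve for heights, a Pareto law with tail index 1.1 for wealth), not figures from the book.

```python
import random


def largest_share(sample):
    """Fraction of the sample total contributed by the single largest value."""
    return max(sample) / sum(sample)


def simulate(n=10_000, seed=42):
    """Return (share of tallest person, share of richest person) for n draws."""
    rng = random.Random(seed)
    # Mediocristan stand-in: heights in cm, bell-shaped (assumed mean and spread).
    heights = [rng.gauss(170.0, 10.0) for _ in range(n)]
    # Extremistan stand-in: "wealth" from a Pareto law (assumed tail index 1.1).
    wealth = [rng.paretovariate(1.1) for _ in range(n)]
    return largest_share(heights), largest_share(wealth)


if __name__ == "__main__":
    height_share, wealth_share = simulate()
    print(f"tallest person's share of total height: {height_share:.5%}")
    print(f"richest person's share of total wealth: {wealth_share:.2%}")
```

In the height sample no individual matters to the total; in the wealth sample a single draw can account for a visible fraction of it, which is the sense in which the total in Extremistan is determined by a small number of extreme events.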

Taleb concludes the chapter by introducing “gray” swans, which are rare and consequential, but somewhat predictable:

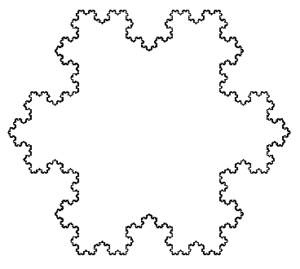

They are near-Black Swans. They are somewhat tractable scientifically--knowing about their incidence should lower your surprise; these events are rare but expected. I call this special case of “gray” swans Mandelbrotian randomness. This category encompasses the randomness that produces phenomena commonly known by terms such as scalable, scale-invariant, power laws, Pareto-Zipf laws, Yule’s law, Paretian-stable processes, Levy-stable, and fractal laws, and we will leave them aside for now since they will be covered in some depth in Part Three…

You can still experience severe Black Swans in Mediocristan, though not easily. How? You may forget that something is random, think that it is deterministic, then have a surprise. Or you can tunnel and miss on a source of uncertainty, whether mild or wild, owing to lack of imagination--most Black Swans result from this “tunneling” disease, which I will discuss in Chapter 9.

CHAPTER 4: ONE THOUSAND AND ONE DAYS, OR HOW NOT TO BE A SUCKER

Taleb introduces the Problem of Induction by using an example from the philosopher Bertrand Russell:

How can we logically go from specific instances to reach general conclusions? How do we know what we know? How do we know that what we have observed from given objects and events suffices to enable us to figure out their other properties? There are traps built into any kind of knowledge gained from observation.

Consider a turkey that is fed every day. Every single feeding will firm up the bird’s belief that it is a general rule of life to be fed every day by friendly members of the human race “looking out for its best interests,” as a politician would say. On the afternoon of the Wednesday before Thanksgiving, something unexpected will happen to the turkey. It will incur a revision of belief.

The rest of this chapter will outline the Black Swan problem in its original form: How can we know the future, given knowledge of the past; or, more generally, how can we figure out properties of the (infinite) unknown based on the (finite) known?

Taleb says that, as in the example of the turkey, the past may be worse than irrelevant. The past may be “viciously misleading.” The turkey’s feeling of safety reached its high point just when the risk was greatest.
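The turkey's growing confidence can be sketched numerically. As an assumption of mine (Taleb does not give a formula), the code below uses Laplace's rule of succession as a stand-in for naive inductive reasoning: after `n` feedings with no exceptions, the estimated chance of being fed tomorrow is `(n + 1) / (n + 2)`.

```python
def turkey_confidence(days_fed: int) -> float:
    """Laplace's rule of succession: estimated chance of being fed tomorrow
    after `days_fed` consecutive feedings and no misses."""
    return (days_fed + 1) / (days_fed + 2)


def confidence_history(total_days: int = 1000):
    """Estimated confidence at the end of each day, from day 1 to `total_days`."""
    return [turkey_confidence(day) for day in range(1, total_days + 1)]


if __name__ == "__main__":
    history = confidence_history(1000)
    for day in (1, 10, 100, 1000):
        print(f"day {day:>4}: estimated chance of being fed tomorrow = {history[day - 1]:.4f}")
```

The estimate rises every single day and peaks on the last day of data, which is exactly when the model is about to fail. Nothing in the feeding record contains the information that matters.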

Taleb gives the example of banking, which was seen and presented as “conservative,” based on the rarity of loans going bust. However, you have to look at the loans over a very long period of time in order to see if a given bank is truly conservative. Taleb:

In the summer of 1982, large American banks lost close to all their past earnings (cumulatively), about everything they ever made in the history of American banking--everything. They had been lending to South and Central American countries that all defaulted at the same time--“an event of an exceptional nature”… They are not conservative; just phenomenally skilled at self-deception by burying the possibility of a large, devastating loss under the rug. In fact, the travesty repeated itself a decade later, with the “risk-conscious” large banks once again under financial strain, many of them near-bankrupt, after the real-estate collapse of the early 1990s in which the now defunct savings and loan industry required a taxpayer-funded bailout of more than half a trillion dollars.

Taleb offers another example: the hedge fund Long-Term Capital Management (LTCM). The fund calculated risk using the methods of two Nobel Prize-winning economists. According to these calculations, the risk of blowing up was infinitesimally small. But in 1998, LTCM collapsed almost instantly.

A Black Swan is always relative to your expectations. LTCM used science to create a Black Swan.

Taleb writes:

In general, positive Black Swans take time to show their effect while negative ones happen very quickly--it is much easier and much faster to destroy than to build.

Although the problem of induction is often called “Hume’s problem,” after the Scottish philosopher and skeptic David Hume, Taleb holds that the problem is older:

The violently antiacademic writer, and antidogma activist, Sextus Empiricus operated close to a millennium and a half before Hume, and formulated the turkey problem with great precision… We surmise that he lived in Alexandria in the second century of our era. He belonged to a school of medicine called “empirical,” since its practitioners doubted theories and causality and relied on past experience as guidance in their treatment, though not putting much trust in it. Furthermore, they did not trust that anatomy revealed function too obviously…

Sextus represented and jotted down the ideas of the school of the Pyrrhonian skeptics who were after some form of intellectual therapy resulting from the suspension of belief… The Pyrrhonian skeptics were docile citizens who followed customs and traditions whenever possible, but taught themselves to systematically doubt everything, and thus attain a level of serenity. But while conservative in their habits, they were rabid in their fight against dogma.

Taleb asserts that his main aim is how not to be a turkey.

In a way, all I care about is making a decision without being the turkey.

Taleb introduces the themes for the next five chapters:

- We focus on preselected segments of the seen and generalize from it to the unseen: the error of confirmation.

- We fool ourselves with stories that cater to our Platonic thirst for distinct patterns: the narrative fallacy.

- We behave as if the Black Swan does not exist: human nature is not programmed for Black Swans.

- What we see is not necessarily all that is there. History hides Black Swans from us and gives us a mistaken idea about the odds of these events: this is the distortion of silent evidence.

- We “tunnel”: that is, we focus on a few well-defined sources of uncertainty, on too specific a list of Black Swans (at the expense of the others that do not easily come to mind).

CHAPTER 5: CONFIRMATION SHMONFIRMATION!

Taleb asks about two hypothetical situations. First, he had lunch with O.J. Simpson and O.J. did not kill anyone during the lunch. Isn’t that evidence that O.J. Simpson is not a killer? Second, Taleb imagines that he took a nap on the railroad track in New Rochelle, New York. He didn’t die during his nap, so isn’t that evidence that it’s perfectly safe to sleep on railroad tracks? Of course, both of these situations are analogous to the 1,001 days during which the turkey was regularly fed. Couldn’t the turkey conclude that there’s no evidence of any sort of Black Swan?

The problem is that people confuse no evidence of Black Swans with evidence of no possible Black Swans. The fact that no Black Swan has been observed so far is not evidence that none is possible. Taleb calls this confusion the round-trip fallacy, since the two statements are not interchangeable.
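A rough calculation shows why a quiet record is such weak evidence. Suppose, purely as an assumption for illustration, that a dangerous event has a 1-in-1,000 chance of occurring on any given day, independently of other days. The sketch below computes how likely a long event-free stretch is under that "risky" hypothesis, and how little a fifty-fifty prior between "risky" and "perfectly safe" moves after observing one.

```python
def prob_no_event(daily_prob: float, days: int) -> float:
    """Chance of seeing no event at all in `days` independent days."""
    return (1.0 - daily_prob) ** days


def posterior_risky(prior_risky: float, daily_prob: float, days: int) -> float:
    """Bayes' rule: belief in the 'risky' hypothesis after `days` quiet days.

    The competing 'safe' hypothesis (an assumption of this sketch) says
    the event can never happen, so it predicts a quiet record with certainty.
    """
    quiet_if_risky = prob_no_event(daily_prob, days)
    quiet_if_safe = 1.0
    numerator = prior_risky * quiet_if_risky
    return numerator / (numerator + (1.0 - prior_risky) * quiet_if_safe)


if __name__ == "__main__":
    print(f"P(quiet year | risky) = {prob_no_event(0.001, 365):.3f}")
    print(f"P(risky | quiet year) = {posterior_risky(0.5, 0.001, 365):.3f}")
```

Under these assumed numbers, a full year without incident is the most likely outcome even if the danger is real, so the quiet year barely shifts the odds. "No evidence of a Black Swan" falls far short of "evidence of no Black Swan."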

Taleb writes that our minds routinely simplify matters, usually without our being consciously aware of it. Note: In his book, Thinking, Fast and Slow, the psychologist Daniel Kahneman argues that System 1, our intuitive system, routinely oversimplifies, usually without our being consciously aware of it.

Taleb continues:

Many people confuse the statement “almost all terrorists are Moslems” with “almost all Moslems are terrorists.” Assume that the first statement is true, that 99 percent of terrorists are Moslems. This would mean that only about .001 percent of Moslems are terrorists, since there are more than one billion Moslems and only, say, ten thousand terrorists, one in a hundred thousand. So the logical mistake makes you (unconsciously) overestimate the odds of a randomly drawn individual Moslem person… being a terrorist by close to fifty thousand times!
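The arithmetic in this passage is an instance of confusing P(A given B) with P(B given A). Here is a minimal sketch of the calculation using the round figures from the quote. The function names are hypothetical, and treating "more than one billion" as exactly one billion is my simplification, so the output is only approximate.

```python
def inverse_conditional(p_population_given_rare: float,
                        rare_count: float,
                        population_count: float) -> float:
    """P(rare | population), given P(population | rare) and the two head counts.

    Follows from counting: members of the rare category who are also in the
    population, divided by the size of the population.
    """
    return p_population_given_rare * rare_count / population_count


def overestimation_factor(p_population_given_rare: float,
                          rare_count: float,
                          population_count: float) -> float:
    """How many times too large the estimate is if the two conditionals are swapped."""
    true_value = inverse_conditional(p_population_given_rare, rare_count, population_count)
    return p_population_given_rare / true_value


if __name__ == "__main__":
    # Round figures from the quote: 99 percent, ten thousand, one billion.
    p = inverse_conditional(0.99, 10_000, 1_000_000_000)
    factor = overestimation_factor(0.99, 10_000, 1_000_000_000)
    print(f"true conditional probability: {p:.6%}")
    print(f"overestimation factor if the statements are swapped: {factor:,.0f}")
```

With these exact inputs the true probability is about .001 percent, matching the quote, and the overestimation factor is about one hundred thousand. That is the same ballpark as the figure in the quote; the precise multiple depends on the head counts assumed, but either way the error spans several orders of magnitude.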

Taleb comments:

Knowledge, even when it is exact, does not often lead to appropriate actions because we tend to forget what we know, or forget how to process it properly if we do not pay attention, even when we are experts.

Taleb notes that the psychologists Daniel Kahneman and Amos Tversky did a number of experiments in which they asked professional statisticians statistical questions not phrased as statistical questions. Many of these experts consistently gave incorrect answers.

Taleb explains:

This domain specificity of our inferences and reactions works both ways: some problems we can understand in their applications but not in textbooks; others we are better at capturing in the textbook than in the practical application. People can manage to effortlessly solve a problem in a social situation but struggle when it is presented as an abstract logical problem. We tend to use different mental machinery--so-called modules--in different situations: our brain lacks a central all-purpose computer that starts with logical rules and applies them equally to all possible situations.

Note: Again, refer to Daniel Kahneman’s book, Thinking, Fast and Slow. System 1 is the fast-thinking intuitive system that works effortlessly and often subconsciously. System 1 is often right, but sometimes very wrong. System 2 is the logical-mathematical system that can be trained to do logical and mathematical problems. System 2 is generally slow and effortful, and we’re fully conscious of what System 2 is doing because we have to focus our attention for it to operate. See: https://boolefund.com/cognitive-biases/

Taleb next writes:

An acronym used in the medical literature is NED, which stands for No Evidence of Disease. There is no such thing as END, Evidence of No Disease. Yet my experience discussing this matter with plenty of doctors, even those who publish papers on their results, is that many slip into the round-trip fallacy during conversation.

Doctors in the midst of the scientific arrogance of the 1960s looked down at mothers’ milk as something primitive, as if it could be replicated by their laboratories--not realizing that mothers’ milk might include useful components that could have eluded their scientific understanding--a simple confusion of absence of evidence of the benefits of mothers’ milk with evidence of absence of the benefits (another case of Platonicity as “it did not make sense” to breast-feed when we could simply use bottles). Many people paid the price for this naive inference: those who were not breast-fed as infants turned out to be at an increased risk of a collection of health problems, including a higher likelihood of developing certain types of cancer--there had to be in mothers’ milk some necessary nutrients that still elude us. Furthermore, benefits to mothers who breast-feed were also neglected, such as a reduction in the risk of breast cancer.

Taleb makes the following point:

I am not saying here that doctors should not have beliefs, only that some kinds of definitive, closed beliefs need to be avoided… Medicine has gotten better--but many kinds of knowledge have not.

Taleb defines naive empiricism:

By a mental mechanism I call naive empiricism, we have a natural tendency to look for instances that confirm our story and our vision of the world--these instances are always easy to find…

Taleb makes an important point here:

Even in testing a hypothesis, we tend to look for instances where the hypothesis proved true.

Daniel Kahneman has made the same point. System 1 (intuition) automatically looks for confirmatory evidence, but even System 2 (the logical-mathematical-rational system) naturally looks for evidence that confirms a given hypothesis. We have to train System 2 not only to do logic and math, but also to look for disconfirming rather than confirming evidence. Taleb says:

We can get closer to the truth by negative instances, not by verification! It is misleading to build a general rule from observed facts. Contrary to conventional wisdom, our body of knowledge does not increase from a series of confirmatory observations, like the turkey’s.

Taleb adds:

Sometimes a lot of data can be meaningless; at other times one single piece of information can be very meaningful. It is true that a thousand days cannot prove you right, but one day can prove you to be wrong.

Taleb introduces the philosopher Karl Popper and his method of conjectures and refutations. First you develop a conjecture (hypothesis). Then you focus on trying to refute the hypothesis. Taleb:

If you think the task is easy, you will be disappointed--few humans have a natural ability to do this. I confess that I am not one of them; it does not come naturally to me.

Our natural tendency, whether using System 1 or System 2, is to look only for corroboration. This is called confirmation bias.

There are exceptions, notes Taleb. Chess grand masters tend to look at where their move might be weak, whereas rookie chess players only look for confirmation. Similarly, George Soros developed a unique ability to look always for evidence that his current hypothesis is wrong. As a result of this and not getting attached to his opinions, Soros quickly exited many of his trades that wouldn’t have worked. Soros is one of the most successful macro investors ever.

Taleb observes that seeing a red Mini Cooper actually confirms the statement that all swans are white. Why? Because if all swans are white, then all nonwhite objects are not swans; in other words, the statement “if it’s a swan, then it’s white” is logically equivalent to its contrapositive, “if it’s not white, then it’s not a swan.” Taleb:

This argument, known as Hempel’s raven paradox, was rediscovered by my friend the (thinking) mathematician Bruno Dupire during one of our intense meditating walks in London--one of those intense walk-discussions, intense to the point of our not noticing the rain. He pointed to a red Mini and shouted, “Look, Nassim, look! No Black Swan!”

Again: Finding instances that confirm the statement “if it’s not white, then it’s not a swan” is logically equivalent to finding instances that confirm the statement “if it’s a swan, then it’s white.” So consider all the objects that confirm the statement “if it’s not white, then it’s not a swan”: red Minis, gray clouds, green cucumbers, yellow lemons, brown soil, etc. The paradox is that we seem to gain ever more information about swans by looking at an infinite series of nonwhite objects.
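The logical equivalence behind the paradox can be checked mechanically. The sketch below enumerates every combination of "is a swan" and "is white" and confirms that the two statements agree in all four cases; the helper names are hypothetical.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication: 'if p then q' is false only when p is true and q is false."""
    return (not p) or q


def contrapositive_equivalent() -> bool:
    """Check 'swan -> white' against 'not white -> not swan' over all truth values."""
    return all(
        implies(is_swan, is_white) == implies(not is_white, not is_swan)
        for is_swan, is_white in product([False, True], repeat=2)
    )


if __name__ == "__main__":
    print("statements equivalent in every case:", contrapositive_equivalent())
    # A red Mini: not a swan, not white. Consistent with both statements.
    print("red Mini consistent:", implies(False, False))
    # A black swan: a swan, not white. Falsifies both statements at once.
    print("black swan consistent:", implies(True, False))
```

The check also shows the asymmetry Taleb stresses: any number of red Minis is consistent with the rule, while a single black swan refutes it.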

Taleb concludes the chapter by noting that our brains evolved to deal with a much more primitive environment than what exists today, which is far more complex.

…the sources of Black Swans today have multiplied beyond measurability. In the primitive environment they were limited to newly encountered wild animals, new enemies, and abrupt weather changes. These events were repeatable enough for us to have built an innate fear of them. This instinct to make inferences rather quickly, and to “tunnel” (i.e., focus on a small number of sources of uncertainty, or causes of known Black Swans) remains rather ingrained in us. This instinct, in a word, is our predicament.

CHAPTER 6: THE NARRATIVE FALLACY

Taleb introduces the narrative fallacy:

We like stories, we like to summarize, and we like to simplify, i.e., to reduce the dimension of matters… The [narrative] fallacy is associated with our vulnerability to overinterpretation and our predilection for compact stories over raw truths. It severely distorts our mental representation of the world; it is particularly acute when it comes to the rare event.

Taleb continues:

The narrative fallacy addresses our limited ability to look at sequences of facts without weaving an explanation into them, or, equivalently, forcing a logical link, an arrow of relationship, upon them. Explanations bind facts together. They make them all the more easily remembered; they help them make more sense. Where this propensity can go wrong is when it increases our impression of understanding.

Taleb clarifies:

To help the reader locate himself: in studying the problem of induction in the previous chapter, we examined what could be inferred about the unseen, what lies outside our information set. Here, we look at the seen, what lies within the information set, and we examine the distortions in the act of processing it.

Taleb observes that our brains automatically theorize and invent explanatory stories to explain facts. It takes effort NOT to invent explanatory stories.

Taleb mentions post hoc rationalization. In an experiment, women were asked to choose from among twelve pairs of nylon stockings the ones they preferred. Then they were asked for the reasons for their choice. The women came up with all sorts of explanations. However, all the stockings were in fact identical.

Split-brain patients have no connection between the left and right hemispheres of their brains. Taleb:

Now, say that you induced such a person to perform an act--raise his finger, laugh, or grab a shovel--in order to ascertain how he ascribes a reason to his act (when in fact you know that there is no reason for it other than your inducing it). If you ask the right hemisphere, here isolated from the left side, to perform the action, then ask the other hemisphere for an explanation, the patient will invariably offer some interpretation: “I was pointing at the ceiling in order to…,” “I saw something interesting on the wall”…

Now, if you do the opposite, namely instruct the isolated left hemisphere of a right-handed person to perform an act and ask the right hemisphere for the reasons, you will be plainly told “I don’t know.”

Taleb notes that the left hemisphere deals with pattern recognition. (But, in general, Taleb warns against the common distinctions between the left brain and the right brain.)

Taleb gives another example. Read the following:

A BIRD IN THE

THE HAND IS WORTH

TWO IN THE BUSH

Notice anything unusual? Try reading it again. Taleb:

The Sydney-based brain scientist Alan Snyder… made the following discovery. If you inhibit the left hemisphere of a right-handed person (more technically, by directing low-frequency magnetic pulses into the left frontotemporal lobes), you will lower his rate of error in reading the above caption. Our propensity to impose meaning and concepts blocks our awareness of the details making up the concept. However, if you zap people’s left hemispheres, they become more realistic--they can draw better and with more verisimilitude. Their minds become better at seeing the objects themselves, cleared of theories, narratives, and prejudice.

Again, System 1 (intuition) automatically invents explanatory stories. System 1 automatically finds patterns, even when none exist.

Moreover, neurotransmitters, chemicals thought to transport signals between different parts of the brain, play a role in the narrative fallacy. Taleb:

It appears that pattern perception increases along with the concentration in the brain of the chemical dopamine. Dopamine also regulates moods and supplies an internal reward system in the brain (not surprisingly, it is found in slightly higher concentrations in the left side of the brains of right-handed persons than on the right side). A higher concentration of dopamine appears to lower skepticism and result in greater vulnerability to pattern detection; an injection of L-dopa, a substance used to treat patients with Parkinson’s disease, seems to increase such activity and lowers one’s suspension of belief. The person becomes vulnerable to all manner of fads…

Our memory of the past is impacted by the narrative fallacy:

Narrativity can viciously affect the remembrance of past events as follows: we will tend to more easily remember those facts from our past that fit a narrative, while we tend to neglect others that do not appear to play a causal role in that narrative. Consider that we recall events in our memory all the while knowing the answer of what happened subsequently. It is literally impossible to ignore posterior information when solving a problem. This simple inability to remember not the true sequence of events but a reconstructed one will make history appear in hindsight to be far more explainable than it actually was--or is.

Taleb again:

So we pull memories along causative lines, revising them involuntarily and unconsciously. We continuously renarrate past events in the light of what appears to make what we think of as logical sense after these events occur.

One major problem in trying to explain and predict the facts is that the facts radically underdetermine the hypotheses that logically imply those facts. For any given set of facts, there exist many theories that can explain and predict those facts. Taleb:

In a famous argument, the logician W.V. Quine showed that there exist families of logically consistent interpretations and theories that can match a given set of facts. Such insight should warn us that mere absence of nonsense may not be sufficient to make something true.

There is a way to escape the narrative fallacy. Develop hypotheses and then run experiments that test those hypotheses. Whichever hypotheses best explain and predict the phenomena in question can be provisionally accepted.

The best hypotheses are only provisionally true and they are never uniquely true. The history of science shows that nearly all hypotheses, no matter how well-supported by experiments, end up being supplanted. Odds are high that the best hypotheses of today, including general relativity and quantum mechanics, will be supplanted at some point in the future. For example, perhaps string theory will be developed to the point where it can predict the phenomena in question with more accuracy and with more generality than both general relativity and quantum mechanics.

Taleb continues:

Let us see how narrativity affects our understanding of the Black Swan. Narrative, as well as its associated mechanism of salience of the sensational fact, can mess up our projection of the odds. Take the following experiment conducted by Kahneman and Tversky… : the subjects were forecasting professionals who were asked to imagine the following scenarios and estimate their odds.

Which is more likely?

- A massive flood somewhere in America in which more than a thousand people die.

- An earthquake in California, causing massive flooding, in which more than a thousand people die.

Most of the forecasting professionals thought that the second scenario is more likely than the first scenario. But logically, the second scenario is a subset of the first scenario and is therefore less likely. It’s the vividness of the second scenario that makes it appear more likely. Again, in trying to understand these scenarios, System 1 can much more easily imagine the second scenario and so automatically views it as more likely.
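
The logic can be written in one line. As a sketch, let F stand for the first scenario (a massive flood somewhere in America killing more than a thousand people) and let E stand for the added detail that a California earthquake caused it. Both symbols are introduced here only for illustration. The second scenario is then "E and F":

```latex
P(E \cap F) = P(F)\,P(E \mid F) \le P(F), \qquad \text{because } 0 \le P(E \mid F) \le 1 .
```

Adding a detail to a story can only hold its probability constant or lower it, however much more plausible the detail makes the story feel.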

Next Taleb defines two kinds of Black Swan:

…there are two varieties of rare events: a) the narrated Black Swans, those that are present in the current discourse and that you are likely to hear about on television, and b) those nobody talks about, since they escape models--those that you would feel ashamed discussing in public because they do not seem plausible. I can safely say that it is entirely compatible with human nature that the incidences of Black Swans would be overestimated in the first case, but severely underestimated in the second one.

CHAPTER 7: LIVING IN THE ANTECHAMBER OF HOPE

Taleb explains:

Let us separate the world into two categories. Some people are like the turkey, exposed to a major blowup without being aware of it, while others play reverse turkey, prepared for big events that might surprise others. In some strategies and life situations, you gamble dollars to win a succession of pennies while appearing to be winning all the time. In others, you risk a succession of pennies to win dollars. In other words, you bet either that the Black Swan will happen or that it will never happen, two strategies that require completely different mind-sets.

Taleb adds:

So some matters that belong to Extremistan are extremely dangerous but do not appear to be so beforehand, since they hide and delay their risks--so suckers think they are “safe.” It is indeed a property of Extremistan to look less risky, in the short run, than it really is.

Taleb describes a strategy of betting on the Black Swan:

…some business bets in which one wins big but infrequently, yet loses small but frequently, are worth making if others are suckers for them and if you have the personal and intellectual stamina. But you need such stamina. You also need to deal with people in your entourage heaping all manner of insult on you, much of it blatant. People often accept that a financial strategy with a small chance of success is not necessarily a bad one as long as the success is large enough to justify it. For a spate of psychological reasons, however, people have difficulty carrying out such a strategy, simply because it requires a combination of belief, a capacity for delayed gratification, and the willingness to be spat upon by clients without blinking.

CHAPTER 8: GIACOMO CASANOVA’S UNFAILING LUCK: THE PROBLEM OF SILENT EVIDENCE

Taleb:

Another fallacy in the way we understand events is that of silent evidence. History hides both Black Swans and its Black Swan-generating ability from us.

Taleb tells the story of the drowned worshippers:

More than two thousand years ago, the Roman orator, belletrist, thinker, Stoic, manipulator-politician, and (usually) virtuous gentleman, Marcus Tullius Cicero, presented the following story. One Diagoras, a nonbeliever in the gods, was shown painted tablets bearing the portraits of some worshippers who prayed, then survived a subsequent shipwreck. The implication was that praying protects you from drowning. Diagoras asked, “Where were the pictures of those who prayed, then drowned?”

This is the problem of silent evidence. Taleb again:

As drowned worshippers do not write histories of their experiences (it is better to be alive for that), so it is with the losers in history, whether people or ideas.

Taleb continues:

The New Yorker alone rejects close to a hundred manuscripts a day, so imagine the number of geniuses that we will never hear about. In a country like France, where more people write books while, sadly, fewer people read them, respectable literary publishers accept one in ten thousand manuscripts they receive from first-time authors. Consider the number of actors who have never passed an audition but would have done very well had they had that lucky break in life.

Luck often plays a role in whether someone becomes a millionaire or not. Taleb notes that many failures share the traits of the successes:

Now consider the cemetery. The graveyard of failed persons will be full of people who shared the following traits: courage, risk taking, optimism, et cetera. Just like the population of millionaires. There may be some differences in skills, but what truly separates the two is for the most part a single factor: luck. Plain luck.

Of course, there’s more luck in some professions than others. In investment management, there’s a great deal of luck. One way to see this is to run computer simulations. You can see that by luck alone, if you start out with 10,000 investors, you’ll end up with a handful of investors who beat the market for 10 straight years.
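
Here is a minimal sketch of such a simulation. It assumes, purely for illustration, that each investor has an independent 50 percent chance of beating the market in any given year, so skill plays no role at all. The function name and parameters are hypothetical.

```python
import random


def count_lucky_streaks(n_investors=10_000, n_years=10, p_beat=0.5, seed=0):
    """Count investors who beat the market every single year by chance alone."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    survivors = 0
    for _ in range(n_investors):
        # An investor "survives" only if every yearly coin flip comes up a win.
        if all(rng.random() < p_beat for _ in range(n_years)):
            survivors += 1
    return survivors


# Expected number of perfect records under pure luck: 10,000 / 2**10, roughly 9.8.
expected = 10_000 * 0.5 ** 10
lucky = count_lucky_streaks()
print(f"expected by luck alone: {expected:.1f}, simulated: {lucky}")
```

Under these assumptions, roughly ten investors out of 10,000 end up with a perfect ten-year record, and nothing about those records distinguishes them from skill.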

Taleb then gives another example of silent evidence. He recounts reading an article about the growing threat of the Russian Mafia in the United States. The article claimed that these guys were tough and brutal because their Gulag experiences had strengthened them. But were they really strengthened by their Gulag experiences?

Taleb asks the reader to imagine gathering a representative sample of the rats in New York. Imagine that Taleb subjects these rats to radiation. Many of the rats will die. When the experiment is over, the surviving rats will be among the strongest of the whole sample. Does that mean that the radiation strengthened the surviving rats? No. The rats survived because they were stronger. But every rat will have been weakened by the radiation.
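
The rat experiment can be sketched in a few lines. Every number below (the strength scale, the damage done by radiation, the survival threshold) is a made-up assumption chosen only to illustrate the selection effect.

```python
import random


def radiation_experiment(n_rats=10_000, damage=20.0, threshold=90.0, seed=1):
    """Weaken every rat by the same amount, then keep only those still above a threshold."""
    rng = random.Random(seed)
    before = [rng.gauss(100.0, 15.0) for _ in range(n_rats)]  # initial strength
    # Radiation weakens every rat; none is made stronger.
    pairs = [(b, b - damage) for b in before]
    survivors = [(b, a) for b, a in pairs if a >= threshold]
    return before, survivors


before, survivors = radiation_experiment()
mean_all_before = sum(before) / len(before)
mean_surv_before = sum(b for b, _ in survivors) / len(survivors)
mean_surv_after = sum(a for _, a in survivors) / len(survivors)

print(f"average strength, all rats before:    {mean_all_before:.1f}")
print(f"average strength, survivors before:   {mean_surv_before:.1f}")
print(f"average strength, survivors after:    {mean_surv_after:.1f}")
```

The survivors were stronger than the average rat before the experiment began, and each of them is weaker afterward than it was before. An observer who sees only the survivors could easily credit the radiation for their strength.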

Taleb offers another example:

Does crime pay? Newspapers report on the criminals who get caught. There is no section in The New York Times recording the stories of those who committed crimes but have not been caught. So it is with cases of tax evasion, government bribes, prostitution rings, poisoning of wealthy spouses (with substances that do not have a name and cannot be detected), and drug trafficking.

In addition, our representation of the standard criminal might be based on the properties of those less intelligent ones who were caught.

Taleb next writes about politicians who promised “rebuilding” in New Orleans after Hurricane Katrina:

Did they promise to do so with their own money? No. It was with public money. Consider that such funds will be taken away from somewhere else… That somewhere else will be less mediatized. It may be… cancer research… Few seem to pay attention to the victims of cancer lying lonely in a state of untelevised depression. Not only do these cancer patients not vote (they will be dead by the next ballot), but they do not manifest themselves to our emotional system. More of them die every day than were killed by Hurricane Katrina; they are the ones who need us the most--not just our financial help, but our attention and kindness. And they may be the ones from whom the money will be taken--indirectly, perhaps even directly. Money (public or private) taken away from research might be responsible for killing them--in a crime that may remain silent.

Giacomo Casanova was an adventurer who seemed to be lucky. However, there have been plenty of adventurers, so some are bound to be lucky. Taleb:

The reader can now see why I use Casanova’s unfailing luck as a generalized framework for the analysis of history, all histories. I generate artificial histories featuring, say, millions of Giacomo Casanovas, and observe the difference between the attributes of the successful Casanovas (because you generate them, you know their exact properties) and those an observer of the result would obtain. From that perspective, it is not a good idea to be a Casanova.

CHAPTER 9: THE LUDIC FALLACY, OR THE UNCERTAINTY OF THE NERD

Taleb introduces Fat Tony (from Brooklyn):

He started as a clerk in the back office of a New York bank in the early 1980s, in the letter-of-credit department. He pushed papers and did some grunt work. Later he grew into giving small business loans and figured out the game of how you can get financing from the monster banks, how their bureaucracies operate, and what they like to see on paper. All the while an employee, he started acquiring property in bankruptcy proceedings, buying it from financial institutions. His big insight is that bank employees who sell you a house that’s not theirs just don’t care as much as the owners; Tony knew very rapidly how to talk to them and maneuver. Later, he also learned to buy and sell gas stations with money borrowed from small neighborhood bankers.

…Tony’s motto is “Finding who the sucker is.” Obviously, they are often the banks: “The clerks don’t care about nothing.” Finding these suckers is second nature to him.

Next Taleb introduces non-Brooklyn John:

Dr. John is a painstaking, reasoned, and gentle fellow. He takes his work seriously, so seriously that, unlike Tony, you can see a line in the sand between his working time and his leisure activities. He has a PhD in electrical engineering from the University of Texas at Austin. Since he knows both computers and statistics, he was hired by an insurance company to do computer simulations; he enjoys the business. Much of what he does consists of running computer programs for “risk management.”

Taleb imagines asking Fat Tony and Dr. John the same question: Assume that a coin is fair. Taleb flips the coin ninety-nine times and gets heads each time. What are the odds that the next flip will be tails?

Because he assumes a fair coin and the flips are independent, Dr. John answers one half (fifty percent). Fat Tony answers, “I’d say no more than 1 percent, of course.” Taleb questions Fat Tony’s reasoning. Fat Tony explains that the coin must be loaded. In other words, it is much more likely that the coin is loaded than that Taleb got ninety-nine heads in a row flipping a fair coin.
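
Fat Tony’s reasoning can be made explicit with Bayes’ rule. The sketch below rests on two assumptions that are not in the book: a prior of one in a billion that the coin is loaded, and a loaded coin that always lands heads. The function name is hypothetical.

```python
from fractions import Fraction


def posterior_loaded(prior_loaded, n_heads, p_heads_if_loaded=Fraction(1)):
    """Probability that the coin is loaded after seeing n_heads heads in a row."""
    like_fair = Fraction(1, 2) ** n_heads        # chance of the run with a fair coin
    like_loaded = p_heads_if_loaded ** n_heads   # chance of the run with a loaded coin
    numerator = prior_loaded * like_loaded
    return numerator / (numerator + (1 - prior_loaded) * like_fair)


# Assumed prior: only a one-in-a-billion chance that the coin is loaded.
post = posterior_loaded(Fraction(1, 10**9), 99)

# Chance of tails on the next flip, averaging over both possibilities.
p_tails_next = post * 0 + (1 - post) * Fraction(1, 2)

print(f"P(loaded | 99 heads) = {float(post):.6f}")
print(f"P(tails on next flip) = {float(p_tails_next):.3e}")
```

Even with a prior that strongly favors a fair coin, ninety-nine heads in a row make a loaded coin overwhelmingly likely, and the chance of tails falls far below Fat Tony’s 1 percent. Dr. John’s answer is correct only if the premise of a fair coin is never questioned.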

Taleb explains:

Simply, people like Dr. John can cause Black Swans outside Mediocristan--their minds are closed. While the problem is very general, one of its nastiest illusions is what I call the ludic fallacy--the attributes of the uncertainty we face in real life have little connection to the sterilized ones we encounter in exams and games.

Taleb was invited by the United States Defense Department to a brainstorming session on risk. Taleb was somewhat surprised by the military people:

I came out of the meeting realizing that only military people deal with randomness with genuine, introspective intellectual honesty--unlike academics and corporate executives using other people’s money. This does not show in war movies, where they are usually portrayed as war-hungry autocrats. The people in front of me were not the people who initiate wars. Indeed, for many, the successful defense policy is the one that manages to eliminate potential dangers without war, such as the strategy of bankrupting the Russians through the escalation in defense spending. When I expressed my amazement to Laurence, another finance person who was sitting next to me, he told me that the military collected more genuine intellects and risk thinkers than most if not all other professions. Defense people wanted to understand the epistemology of risk.

Taleb notes that the military folks had their own name for a Black Swan: unknown unknown. Taleb came to the meeting prepared to discuss a new phrase he invented: the ludic fallacy, or the uncertainty of the nerd.

In the casino you know the rules, you can calculate the odds, and the type of uncertainty we encounter there, we will see later, is mild, belonging to Mediocristan. My prepared statement was this: “The casino is the only human venture I know where the probabilities are known, Gaussian (i.e., bell-curve), and almost computable.”…

In real life you do not know the odds; you need to discover them, and the sources of uncertainty are not defined.

Taleb adds:

What can be mathematized is usually not Gaussian, but Mandelbrotian.

What’s fascinating about the casino where the meeting was held is that the four largest losses incurred (or narrowly avoided) had nothing to do with gambling.

- First, they lost around $100 million when an irreplaceable performer in their main show was maimed by a tiger.

- Second, a disgruntled contractor was hurt during the construction of a hotel annex. He was so offended by the settlement offered him that he made an attempt to dynamite the casino.

- Third, a casino employee didn’t file required tax forms for years. The casino ended up paying a huge fine (which was the least bad alternative).

- Fourth, there was a spate of other dangerous scenes, such as the kidnapping of the casino owner’s daughter, which caused him, in order to secure cash for the ransom, to violate gambling laws by dipping into the casino coffers.

Taleb draws a conclusion about the casino:

A back-of-the-envelope calculation shows that the dollar value of these Black Swans, the off-model hits and potential hits I’ve just outlined, swamp the on-model risks by a factor of close to 1,000 to 1. The casino spent hundreds of millions of dollars on gambling theory and high-tech surveillance while the bulk of their risks came from outside their models.

All this, and yet the rest of the world still learns about uncertainty and probability from gambling examples.

Taleb wraps up Part One of his book:

We love the tangible, the confirmation, the palpable, the real, the visible, the concrete, the known, the seen, the vivid, the visual, the social, the embedded, the emotionally laden, the salient, the stereotypical, the moving, the theatrical, the romanced, the cosmetic, the official, the scholarly-sounding verbiage (b******t), the pompous Gaussian economist, the mathematicized crap, the pomp, the Academie Francaise, Harvard Business School, the Nobel Prize, dark business suits with white shirts and Ferragamo ties, the moving discourse, and the lurid. Most of all we favor the narrated.

Alas, we are not manufactured, in our current edition of the human race, to understand abstract matters--we need context. Randomness and uncertainty are abstractions. We respect what had happened, ignoring what could have happened.

PART TWO: WE JUST CAN’T PREDICT

Taleb:

…the gains in our ability to model (and predict) the world may be dwarfed by the increases in its complexity--implying a greater and greater role for the unpredicted.

CHAPTER 10: THE SCANDAL OF PREDICTION

Taleb highlights the story of the Sydney Opera House:

The Sydney Opera House was supposed to open in early 1963 at a cost of AU$ 7 million. It finally opened its doors more than ten years later, and, although it was a less ambitious version than initially envisioned, it ended up costing around AU$ 104 million.

Taleb then asks:

Why on earth do we predict so much? Worse, even, and more interesting: Why don’t we talk about our record in predicting? Why don’t we see how we (almost) always miss the big events? I call this the scandal of prediction.

The problem is that when our knowledge grows, our confidence about how much we know generally increases even faster.

Try the following quiz. For each question, give a range that you are 90 percent confident contains the correct answer.

- What was Martin Luther King, Jr.’s age at death?

- What is the length of the Nile river, in miles?

- How many countries belong to OPEC?

- How many books are there in the Old Testament?

- What is the diameter of the moon, in miles?

- What is the weight of an empty Boeing 747, in pounds?

- In what year was Mozart born?

- What is the gestation period of an Asian elephant, in days?

- What is the air distance from London to Tokyo, in miles?

- What is the deepest known point in the ocean, in feet?

If you’re not overconfident, then you should have gotten nine out of ten questions right because you gave a 90 percent confidence interval for each question. However, most people get more than one question wrong, which means most people are overconfident.

(Answers: 39, 4132, 12, 39, 2158.8, 390000, 1756, 645, 5959, 35994.)
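
To score yourself, count how many of your ranges contain the true answer. The sketch below does this for one hypothetical quiz-taker. The intervals in `my_intervals` are invented for illustration; only the answer key comes from the quiz above.

```python
# Answer key, in the order the questions are listed above.
ANSWERS = [39, 4132, 12, 39, 2158.8, 390000, 1756, 645, 5959, 35994]


def hit_rate(intervals, answers=ANSWERS):
    """Fraction of (low, high) intervals that contain the corresponding true answer."""
    if len(intervals) != len(answers):
        raise ValueError("need exactly one interval per question")
    hits = sum(low <= truth <= high for (low, high), truth in zip(intervals, answers))
    return hits / len(answers)


# Hypothetical "90 percent confidence" ranges from one quiz-taker.
my_intervals = [
    (35, 45),            # Martin Luther King, Jr.'s age at death
    (3000, 5000),        # length of the Nile, miles
    (8, 11),             # OPEC member countries
    (20, 30),            # books in the Old Testament
    (1500, 2500),        # diameter of the moon, miles
    (200000, 300000),    # weight of an empty Boeing 747, pounds
    (1740, 1770),        # year of Mozart's birth
    (500, 600),          # gestation period of an Asian elephant, days
    (5000, 7000),        # air distance from London to Tokyo, miles
    (25000, 40000),      # deepest known point in the ocean, feet
]

print(f"stated confidence: 90%, actual hit rate: {hit_rate(my_intervals):.0%}")
```

This quiz-taker claimed 90 percent confidence but captured the truth only 60 percent of the time. The same scoring works for forecasters: record each stated interval, then compare the hit rate with the stated confidence once the outcomes are known.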

A similar quiz is to randomly select some number, like the population of Egypt, and then ask 100 random people to give their 98 percent confidence interval. “I am 98 percent confident that the population of Egypt is between 40 million and 120 million.” If the 100 random people are not overconfident, then 98 out of 100 should get the question right. In practice, however, it turns out that a high number (15 to 30 percent) get the wrong answer. Taleb:

This experiment has been replicated dozens of times, across populations, professions, and cultures, and just about every empirical psychologist and decision theorist has tried it on his class to show his students the big problem of humankind: we are simply not wise enough to be trusted with knowledge. The intended 2 percent error rate usually turns out to be between 15 percent and 30 percent, depending on the population and the subject matter.

I have tested myself and, sure enough, failed, even while consciously trying to be humble by carefully setting a wide range… Yesterday afternoon, I gave a workshop in London… I decided to make a quick experiment during my talk.

I asked the participants to take a stab at a range for the number of books in Umberto Eco’s library, which, as we know from the introduction to Part One, contains 30,000 volumes. Of the sixty attendees, not a single one made the range wide enough to include the actual number (the 2 percent error rate became 100 percent).

Taleb argues that guessing some quantity you don’t know and making a prediction about the future are logically similar. We could ask experts who make predictions to give a confidence interval and then track over time how accurate their predictions are compared to the confidence interval.

Taleb continues:

The problem is that our ideas are sticky: once we produce a theory, we are not likely to change our minds--so those who delay developing their theories are better off. When you develop your opinions on the basis of weak evidence, you will have difficulty interpreting subsequent information that contradicts these opinions, even if this new information is obviously more accurate. Two mechanisms are at play here: …confirmation bias… and belief perseverance [also called consistency bias], the tendency not to reverse opinions you already have. Remember that we treat ideas like possessions, and it will be hard for us to part with them.

…the more detailed knowledge one gets of empirical reality, the more one will see the noise (i.e., the anecdote) and mistake it for actual information. Remember that we are swayed by the sensational.

Taleb adds:

…in another telling experiment, the psychologist Paul Slovic asked bookmakers to select from eighty-eight variables in past horse races those that they found useful in computing the odds. These variables included all manner of statistical information about past performances. The bookmakers were given the ten most useful variables, then asked to predict the outcome of races. Then they were given ten more and asked to predict again. The increase in the information set did not lead to an increase in their accuracy; their confidence in their choices, on the other hand, went up markedly. Information proved to be toxic.

When it comes to dealing with experts, many experts do have a great deal of knowledge. However, most experts have a high error rate when it comes to making predictions. Moreover, many experts don’t even keep track of how accurate their predictions are.

Another way to think about the problem is to try to distinguish those with true expertise from those without it. Taleb:

- Experts who tend to be experts: livestock judges, astronomers, test pilots, soil judges, chess masters, physicists, mathematicians (when they deal with mathematical problems, not empirical ones), accountants, grain inspectors, photo interpreters, insurance analysts (dealing with bell curve-style statistics).

- Experts who tend to be… not experts: stockbrokers, clinical psychologists, psychiatrists, college admissions officers, court judges, councilors, personnel selectors, intelligence analysts… economists, financial forecasters, finance professors, political scientists, “risk experts,” Bank for International Settlements staff, august members of the International Association of Financial Engineers, and personal financial advisors.

Taleb comments:

You cannot ignore self-delusion. The problem with experts is that they do not know what they do not know. Lack of knowledge and delusion about the quality of your knowledge come together--the same process that makes you know less also makes you satisfied with your knowledge.

Taleb asserts:

Our predictors may be good at predicting the ordinary, but not the irregular, and this is where they ultimately fail. All you need to do is miss one interest-rates move, from 6 percent to 1 percent in a longer-term projection (what happened between 2000 and 2001) to have all your subsequent forecasts rendered completely ineffectual in correcting your cumulative track record. What matters is not how often you are right, but how large your cumulative errors are.

And these cumulative errors depend largely on the big surprises, the big opportunities. Not only do economic, financial, and political predictors miss them, but they are quite ashamed to say anything outlandish to their clients--and yet events, it turns out, are almost always outlandish. Furthermore… economic forecasters tend to fall closer to one another than to the resulting outcome. Nobody wants to be off the wall.

Taleb notes a paper that analyzed two thousand predictions by brokerage-house analysts. These predictions didn’t predict anything at all. You could have done about as well by naively extrapolating the prior period to the next period. Also, the average difference between the forecasts was smaller than the average error of the forecasts. This indicates herding.

Taleb then writes about the psychologist Philip Tetlock’s research. Tetlock analyzed twenty-seven thousand predictions by close to three hundred specialists. The predictions took the form of more of x, no change in x, or less of x. Tetlock found that, on the whole, these predictions by experts were little better than chance. You could have done as well by rolling a die.

Tetlock worked to discover why most expert predictors did not realize that they weren’t good at making predictions. He came up with several methods of belief defense:

- You tell yourself that you were playing a different game. Virtually no social scientist predicted the fall of the Soviet Union. You argue that the Russians had hidden the relevant information. If you’d had enough information, you could have predicted the fall of the Soviet Union. “It is not your skills that are to blame.”

- You invoke the outlier. Something happened that was outside the system. It was a Black Swan, and you are not supposed to predict Black Swans. Such events are “exogenous,” coming from outside your science. The model was right, it worked well, but the game turned out to be a different one than anticipated.

- The “almost right” defense. Retrospectively, it is easy to feel that it was a close call.

Taleb writes:

We attribute our successes to our skills, and our failures to external events outside our control, namely to randomness… This causes us to think that we are better than others at whatever we do for a living. Ninety-four percent of Swedes believe that their driving skills put them in the top 50 percent of Swedish drivers; 84 percent of Frenchmen feel that their lovemaking abilities put them in the top half of French lovers.

Taleb observes that we tend to feel a little unique, unlike others. If we get married, we don’t consider divorce a possibility. If we buy a house, we don’t think we’ll move. People who lose their job often don’t expect it. People who try drugs don’t think they’ll keep doing it for long.

Taleb says:

Tetlock distinguishes between two types of predictors, the hedgehog and the fox, according to a distinction promoted by the essayist Isaiah Berlin. As in Aesop’s fable, the hedgehog knows one thing, the fox knows many things… Many of the prediction failures come from hedgehogs who are mentally married to a single big Black Swan event, a big bet that is not likely to play out. The hedgehog is someone focusing on a single, improbable, and consequential event, falling for the narrative fallacy that makes us so blinded by one single outcome that we cannot imagine others.

Taleb makes it clear that he thinks we should be foxes, not hedgehogs. Taleb has never tried to predict specific Black Swans. Rather, he wants to be prepared for whatever might come. That’s why it’s better to be a fox than a hedgehog. Hedgehogs are much worse, on the whole, at making predictions than foxes are.

Taleb mentions a study by Spyros Makridakis and Michele Hibon of predictions made using econometrics. They discovered that “statistically sophisticated or complex methods” are not clearly better than simpler ones.

Projects usually take longer and are more expensive than most people think. For instance, students regularly underestimate how long it will take them to complete a class project. Taleb then adds:

With projects of great novelty, such as a military invasion, an all-out war, or something entirely new, errors explode upward. In fact, the more routine the task, the better you learn to forecast. But there is always something nonroutine in our modern environment.

Taleb continues:

…we are too focused on matters internal to the project to take into account external uncertainty, the “unknown unknown,” so to speak, the contents of the unread books.

Another important bias to understand is anchoring. The human brain, relying on System 1, will grab on to any number, no matter how random, as a basis for guessing some other quantity. For example, Kahneman and Tversky spun a wheel of fortune in front of some people. What the people didn’t know was that the wheel was rigged to stop at either “10” or “65.” After the wheel stopped, people were asked to write down their guess of the percentage of African countries in the United Nations. Predictably, those who saw “10” guessed a much lower percentage (25% was the average guess) than those who saw “65” (45% was the average guess).

Next, Taleb points out that life expectancy is from Mediocristan. It is not scalable. The longer we live, the less long we are expected to live. By contrast, projects and ventures tend to be scalable. The longer we have waited for some project to be completed, the longer we can be expected to have to wait from that point forward.

Taleb gives the example of a refugee waiting to return to his or her homeland. The longer the refugee has waited so far, the longer they should expect to have to wait going forward. Furthermore, consider wars: they tend to last longer and kill more people than expected. The average war may last six months, but if your war has been going on for a few years, expect at least a few more years.
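
To make the contrast concrete, here is a minimal simulation sketch in Python. The numbers are illustrative assumptions, not Taleb’s: lifespans are drawn from a bell curve (mean 80 years, standard deviation 10), and project durations are drawn from a Pareto distribution with tail exponent 1.5. For a Pareto variable with exponent α > 1, the expected remaining wait after waiting t is t / (α - 1), so it grows in proportion to the time already elapsed.

```python
import random


def expected_remaining(samples, elapsed):
    """Average additional time among the samples that already exceed `elapsed`."""
    remaining = [x - elapsed for x in samples if x > elapsed]
    return sum(remaining) / len(remaining) if remaining else float("nan")


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 200_000

# Thin-tailed stand-in for lifespans: Gaussian, mean 80, sd 10 (assumed numbers).
lifespans = [rng.gauss(80, 10) for _ in range(n)]

# Fat-tailed stand-in for project durations: Pareto, tail exponent 1.5 (assumed),
# with a minimum duration of 1 month.
durations = [rng.paretovariate(1.5) for _ in range(n)]

for age in (60, 80, 100):
    extra = expected_remaining(lifespans, age)
    print(f"lifespan, already {age} years: about {extra:.1f} more years")

for waited in (2, 4, 8):
    extra = expected_remaining(durations, waited)
    print(f"project, already {waited} months: about {extra:.1f} more months")
```

Under these assumptions, the expected remaining lifespan shrinks as age rises, while the expected remaining project time grows with the time already waited.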

Taleb argues that corporate and government projections have an obvious flaw: they do not include an error rate. There are three fallacies involved:

- The first fallacy: variability matters. For planning purposes, the accuracy of your forecast matters much more than the forecast itself, observes Taleb. Don’t cross a river if it is four feet deep on average. Taleb gives another example. If you’re going on a trip to a remote location, then you’ll pack different clothes if it’s supposed to be seventy degrees Fahrenheit with an expected error rate of forty degrees than if the margin of error was only five degrees. “The policies we need to make decisions on should depend far more on the range of possible outcomes than on the expected final number.”

- The second fallacy lies in failing to take into account forecast degradation as the projected period lengthens… Our forecast errors have traditionally been enormous…

- The third fallacy, and perhaps the gravest, concerns a misunderstanding of the random character of the variables being forecast. Owing to the Black Swan, these variables can accommodate far more optimistic (or far more pessimistic) scenarios than are currently expected.

Taleb points out that, as in the case of the depth of the river, what matters even more than the error rate is the worst-case scenario.
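
A small sketch of the river example, using made-up depth soundings (the numbers and names are hypothetical): two crossings share the same four-foot average, yet only the deepest point tells you whether you can wade across.

```python
from statistics import mean

# Hypothetical depth soundings in feet; both crossings average exactly four feet.
shallow_ford = [3.5, 4.0, 4.5, 4.0, 4.0]
hidden_channel = [1.0, 1.0, 2.0, 1.0, 15.0]

for name, depths in (("shallow ford", shallow_ford), ("hidden channel", hidden_channel)):
    # The average hides the range; the maximum is the worst case for a wader.
    print(f"{name}: average {mean(depths):.1f} ft, deepest point {max(depths):.1f} ft")
```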

A Black Swan has three attributes: unpredictability, consequences, and retrospective explainability. Taleb next examines unpredictability.

CHAPTER 11: HOW TO LOOK FOR BIRD POOP

Taleb notes that most discoveries are the product of serendipity.

Taleb writes:

Take this dramatic example of a serendipitous discovery. Alexander Fleming was cleaning up his laboratory when he found that penicillium mold had contaminated one of his old experiments. He thus happened upon the antibacterial properties of penicillin, the reason many of us are alive today (including…myself, for typhoid fever is often fatal when untreated)… Furthermore, while in hindsight the discovery appears momentous, it took a very long time for health officials to realize the importance of what they had on their hands. Even Fleming lost faith in the idea before it was subsequently revived.

In 1965 two radio astronomists at Bell Labs in New Jersey who were mounting a large antenna were bothered by a background noise, a hiss, like the static that you hear when you have bad reception. The noise could not be eradicated, even after they cleaned the bird excrement out of the dish, since they were convinced that bird poop was behind the noise. It took a while for them to figure out that what they were hearing was the trace of the birth of the universe, the cosmic background microwave radiation. This discovery revived the big bang theory, a languishing idea that was posited by earlier researchers.

What’s interesting (but typical) is that the physicists Ralph Alpher, Hans Bethe, and George Gamow, who conceived of the idea of cosmic background radiation, did not discover the evidence they were looking for, while those not looking for such evidence found it.

Furthermore, observes Taleb:

When a new technology emerges, we either grossly underestimate or severely overestimate its importance. Thomas Watson, the founder of IBM, once predicted that there would be no need for more than just a handful of computers.

Taleb adds:

The laser is a prime illustration of a tool made for a given purpose (actually no real purpose) that then found applications that were not even dreamed of at the time. It was a typical “solution looking for a problem.” Among the early applications was the surgical stitching of detached retinas. Half a century later, The Economist asked Charles Townes, the alleged inventor of the laser, if he had had retinas on his mind. He had not. He was satisfying his desire to split light beams, and that was that. In fact, Townes’s colleagues teased him quite a bit about the irrelevance of his discovery. Yet just consider the effects of the laser in the world around you: compact disks, eyesight corrections, microsurgery, data storage and retrieval, all unforeseen applications of the technology.

Taleb mentions that the French mathematician Henri Poincare was aware that equations have limitations when it comes to predicting the future.

Poincare’s reasoning was simple: as you project into the future you may need an increasing amount of precision about the dynamics of the process that you are modeling, since your error rate grows very rapidly… Poincare showed this in a very simple case, famously known as the “three body problem.” If you have only two planets in a solar-style system, with nothing else affecting their course, then you may be able to indefinitely predict the behavior of these planets, no sweat. But add a third body, say a comet, ever so small, between the planets. Initially the third body will cause no drift, no impact; later, with time, its effects on the other two bodies may become explosive.

Our world contains far more than just three bodies. Therefore, many future phenomena are unpredictable due to complexity.

The mathematician Michael Berry gives another example: billiard balls. Taleb:

If you know a set of basic parameters concerning the ball at rest, can compute the resistance of the table (quite elementary), and can gauge the strength of the impact, then it is rather easy to predict what would happen at the first hit… The problem is that to correctly predict the ninth impact, you need to take into account the gravitational pull of someone standing next to the table… And to compute the fifty-sixth impact, every single elementary particle of the universe needs to be present in your assumptions!

Moreover, Taleb points out, in the billiard ball example, we don’t have to worry about free will. Nor have we incorporated relativity and quantum effects.

You can think rigorously, but you cannot use numbers. Poincare even invented a field for this, analysis in situ, now part of topology…

In the 1960s the MIT meteorologist Edward Lorenz rediscovered Poincare’s results on his own, once again by accident. He was producing a computer model of weather dynamics, and he ran a simulation that projected a weather system a few days ahead. Later he tried to repeat the same simulation with the exact same model and what he thought were the same input parameters, but he got wildly different results… Lorenz subsequently realized that the consequential divergence in his results arose not from error, but from a small rounding in the input parameters. This became known as the butterfly effect, since a butterfly moving its wings in India could cause a hurricane in New York, two years later. Lorenz’s findings generated interest in the field of chaos theory.
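
Lorenz’s weather model is too large for a short example, so the sketch below swaps in the logistic map, a standard one-line chaotic system, purely as a stand-in. The two starting values, one of them rounded to three decimals, are chosen for illustration, and the function name is hypothetical.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate x -> r * x * (1 - x), which behaves chaotically at r = 4."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Same model, same number of steps; the only difference is a rounded input.
full = logistic_trajectory(0.506127, 50)
rounded = logistic_trajectory(0.506, 50)

for step in (0, 5, 10, 20, 30, 40, 50):
    gap = abs(full[step] - rounded[step])
    print(f"step {step:2d}: gap between the two runs = {gap:.6f}")
```

The two runs start about 0.0001 apart. Because the map stretches small differences at almost every step, the gap soon becomes as large as the values themselves, at which point the rounded run says nothing useful about the unrounded one.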

Much economics has been developed assuming that agents are rational. However, Kahneman and Tversky have shown, in their work on heuristics and biases, that many people are less than fully rational. Kahneman and Tversky’s experiments have been repeated countless times over decades. Some people prefer apples to oranges, oranges to pears, and pears to apples. These people do not have consistent preferences. Furthermore, when guessing at an unknown quantity, many people will anchor on any random number even though the random number often has no relation to the quantity guessed at.

People also make different choices based on framing effects. Kahneman and Tversky have illustrated this with the following experiment in which 600 people are assumed to have a deadly disease.

First Kahneman and Tversky used a positive framing:

- Treatment A will save 200 lives for sure.

- Treatment B has a 33% chance of saving everyone and a 67% chance of saving no one.

With this framing, 72% prefer Treatment A and 28% prefer Treatment B.

Next a negative framing:

- Treatment A will kill 400 people for sure.

- Treatment B has a 33% chance of killing no one and a 67% chance of killing everyone.

With this framing, only 22% prefer Treatment A, while 78% prefer Treatment B.

Note: The two frames are logically identical, but the first frame focuses on lives saved, whereas the second frame focuses on lives lost.
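
The claim that the two frames are identical can be checked with simple arithmetic. The sketch below assumes that the 33% and 67% in the text are rounded values of 1/3 and 2/3 (with exactly 33%, Treatment B would save 198 people in expectation rather than 200). The variable names are illustrative.

```python
from fractions import Fraction

population = 600
p_save_all = Fraction(1, 3)   # assumed exact value behind the rounded "33%"
p_save_none = 1 - p_save_all  # the rounded "67%"

# Positive frame: expected number of lives saved.
saved_a = 200
saved_b = p_save_all * population + p_save_none * 0

# Negative frame: expected number of deaths.
deaths_a = 400
deaths_b = p_save_all * 0 + p_save_none * population

print(f"expected saved:  A = {saved_a}, B = {saved_b}")
print(f"expected deaths: A = {deaths_a}, B = {deaths_b}")
print("frames agree:", saved_a + deaths_a == population and saved_b + deaths_b == population)
```

In both frames the treatments have the same expected outcome; only the certainty of Treatment A and the wording differ.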

Taleb argues that the same past data can confirm a theory and also its exact opposite. Assume a linear series of points. For the turkey, that can either confirm safety or it can mean the turkey is much closer to being turned into dinner. Similarly, as Taleb notes, each day you live can either mean that you’re more likely to be immortal or that you’re closer to death. Taleb observes that a linear regression model can be enormously misleading if you’re in Extremistan: the fact that the data so far appear to fall on a straight line tells you nothing about what’s to come.

Taleb says the philosopher Nelson Goodman calls this the riddle of induction:

Let’s say that you observe an emerald. It was green yesterday and the day before yesterday. It is green again today. Normally this would confirm the “green” property: we can assume that the emerald will be green tomorrow. But to Goodman, the emerald’s color history could equally confirm the “grue” property. What is this grue property? The emerald’s grue property is to be green until some specified date… and then blue thereafter.

The riddle of induction is another version of the narrative fallacy: you face an infinity of “stories” that explain what you have seen. The severity of Goodman’s riddle of induction is as follows: if there is no longer even a single unique way to “generalize” from what you see, to make an inference about the unknown, then how should you operate? The answer, clearly, will be that you should employ “common sense,” but your common sense may not be so well developed with respect to some Extremistan variables.

CHAPTER 12: EPISTEMOCRACY, A DREAM

Taleb defines an epistemocrat as someone who is keenly aware that his knowledge is suspect. Epistemocracy is a place where the laws are made with human fallibility in mind. Taleb says that the major modern epistemocrat is the French philosopher Michel de Montaigne.

Montaigne is quite refreshing to read after the strains of a modern education since he fully accepted human weaknesses and understood that no philosophy could be effective unless it took into account our deeply ingrained imperfections, the limitations of our rationality, the flaws that make us human. It is not that he was ahead of his time; it would be better said that later scholars (advocating rationality) were backward.

Montaigne was not just a thinker, but also a doer. He had been a magistrate, a businessman, and the mayor of Bordeaux. Taleb writes that Montaigne was a skeptic, an antidogmatist.

So what would an epistemocracy look like?

The Black Swan asymmetry allows you to be confident about what is wrong, not about what you believe is right.

Taleb adds:

The notion of future mixed with chance, not a deterministic extension of your perception of the past, is a mental operation that our mind cannot perform. Chance is too fuzzy for us to be a category by itself. There is an asymmetry between past and future, and it is too subtle for us to understand naturally.

The first consequence of this asymmetry is that, in people’s minds, the relationship between the past and the future does not learn from the relationship between the past and the past previous to it. There is a blind spot: when we think of tomorrow we do not frame it in terms of what we thought about yesterday or the day before yesterday. Because of this introspective defect we fail to learn about the difference between our past predictions and the subsequent outcomes. When we think of tomorrow, we just project it as another yesterday.

As yet another example of how we can’t predict, psychologists have shown that we can’t predict our future affective states in response to both pleasant and unpleasant events. The point is that we don’t learn from our past errors in predicting our future affective states. We continue to make the same mistake by overestimating the future impact of both pleasant and unpleasant events. We persist in thinking that unpleasant events will make us more unhappy than they actually do. We persist in thinking that pleasant events will make us happier than they actually do. We simply don’t learn from the fact that we made these erroneous predictions in the past.

Next Taleb observes that it’s not only that we can’t predict the future. We don’t know the past either. Taleb gives this example:

- Operation 1 (the melting ice cube): Imagine an ice cube and consider how it may melt over the next two hours while you play a few rounds of poker with your friends. Try to envision the shape of the resulting puddle.